Sexual harms against children occur online every day. Knowing the language bad actors use when engaging in this behavior, such as soliciting child sexual abuse material (CSAM), can be a crucial first step in combating these harms. When CSAM keywords surface in content moderation, they can signal these child safety risks to trust and safety teams.

That’s why, in our efforts to help more digital platforms begin to check for discussions and behavior around CSAM, Thorn offers a CSAM Keyword Hub — a collection of more than 37,000 words and phrases related to CSAM and child sexual exploitation in multiple languages.

The Hub is completely free and provides generative AI and content-hosting platforms with a quick and easy starting point for implementing a content moderation process that looks for CSAM and child sexual exploitation-related text.

For teams who’ve never scanned for CSAM behavior, the Hub’s word lists can help provide an initial picture of the size of the problem on their platform. And for those who have a CSAM detection process, the Hub can help increase the effectiveness or precision of current moderation tools.

The Hub’s powerful collection of words and phrases was contributed by leading digital platforms around the world.

Using the Keyword Hub to monitor for CSAM

The CSAM Keyword Hub contains lists of identified keywords or phrases associated with policy-violating activities around CSAM and child sexual exploitation — such as requesting, soliciting and trading CSAM, and soliciting, grooming, and sextorting minors. Platforms that offer a chat function can use these lists for various efforts:

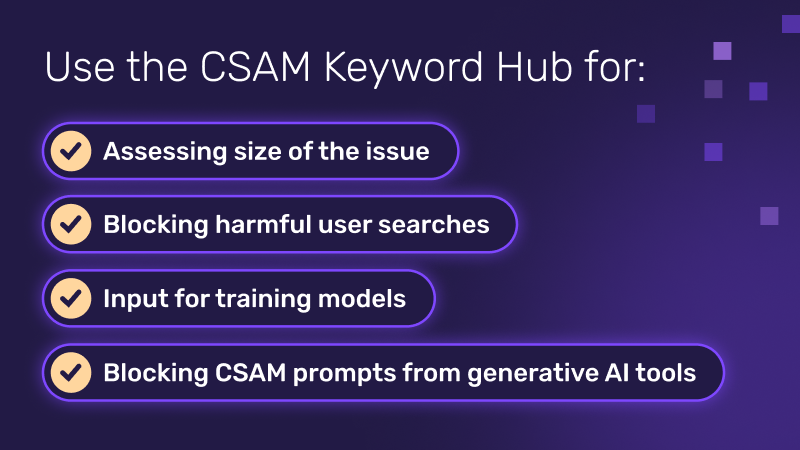

Assessing size of the issue: Content moderation teams can use the lists to get an initial scope of the issue — a free first step to identifying whether CSAM activity is occurring on their platform, and the size of the problem.

Blocking harmful user searches: The lists can be integrated into moderation tools to block specific keywords used to search for CSAM. The range of language and queries used by abusers may be far broader than trust and safety teams suspect. Perpetrators of child sexual abuse are creative, and their targets aren’t always obvious.

Input for training models: For companies with machine learning models, the Keyword Hub can help kick-start their training with relevant terms. These lists shouldn’t be used exclusively, but they provide a robust set of keywords to augment other inputs.

Blocking CSAM prompts and responses from generative AI tools: The Hub can also help platforms ensure their generative AI products aren’t misused. Today, bad actors use gen AI tools to scale child sexual abuse and exploitation. Fortifying a platform’s AI tools against such acts is paramount to increasing child safety online and reducing CSAM and other online harms.
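The first two uses above, sizing the issue and blocking searches, can be sketched in a few lines of Python. This is a minimal illustration, not the Hub’s own tooling: the Hub’s export format isn’t described here, so the sketch assumes the keywords arrive as a plain list of strings, and the function names (`build_matcher`, `estimate_scope`, `should_block_search`) and the placeholder terms are hypothetical.

```python
import re
from collections import Counter

# Placeholder terms only. Real lists would come from the Hub; a plain
# list of strings is an assumption about how they are loaded.
KEYWORDS = ["placeholder term a", "placeholder-term-b"]


def build_matcher(keywords):
    """Compile one case-insensitive pattern matching any keyword as a whole word or phrase."""
    if not keywords:
        raise ValueError("keyword list is empty")
    # Longest terms first so a longer phrase wins over a shorter prefix.
    escaped = sorted((re.escape(k) for k in keywords), key=len, reverse=True)
    return re.compile(r"(?<!\w)(?:" + "|".join(escaped) + r")(?!\w)", re.IGNORECASE)


def find_matches(text, matcher):
    """Return the lowercased keywords found in text."""
    return [m.group(0).lower() for m in matcher.finditer(text)]


def estimate_scope(messages, matcher):
    """Count messages containing at least one keyword, and how many messages each keyword hit."""
    flagged = 0
    hits = Counter()
    for message in messages:
        found = find_matches(message, matcher)
        if found:
            flagged += 1
            hits.update(set(found))
    return flagged, hits


def should_block_search(query, matcher):
    """Return True when a search query contains any listed keyword."""
    return matcher.search(query) is not None
```

A team might run `estimate_scope` over a sample of chat messages to get a first count, then call `should_block_search` in the search path. Whole-word matching is a design choice that reduces accidental hits inside longer words, and it will miss deliberate misspellings.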

Adaptable to industries and platforms

The CSAM Keyword Hub offers diverse languages and can be easily adapted into existing content moderation workflows, allowing platforms to expand their detection efforts across multiple languages.

Teams can also turn keyword sets on and off, focusing on those relevant to their industry, platform, and product offering. Thorn subject matter experts created tiers that categorize keywords by actionability, from Tier 1 (most actionable) to Tier 5 (least actionable). Machine learning models then assigned each keyword to a tier, indicating how likely it is to produce an actionable policy violation.
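Selecting keyword sets by tier and language might look like the sketch below. The record layout is an assumption: `KeywordEntry`, its fields, and `select_keywords` are hypothetical names, and the only detail taken from the text is that tiers run from 1 (most actionable) to 5 (least actionable).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeywordEntry:
    """Hypothetical record shape; the Hub's actual schema is not described."""
    term: str
    language: str  # e.g. "en", "es"
    tier: int      # 1 = most actionable, 5 = least actionable


def select_keywords(entries, max_tier, languages=None):
    """Keep entries in tiers 1..max_tier, optionally limited to the given languages."""
    if not 1 <= max_tier <= 5:
        raise ValueError("max_tier must be between 1 and 5")
    allowed = None if languages is None else set(languages)
    return [
        entry
        for entry in entries
        if entry.tier <= max_tier
        and (allowed is None or entry.language in allowed)
    ]
```

For example, a platform could begin with `max_tier=1` for automated blocking and widen to lower tiers only where human review capacity exists.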

A complement to other tools and strategies

Hosting a vast collection of keyword lists, the Hub serves as a foundation on which platforms can build their efforts against CSAM and child sexual exploitation. Keyword matches are exact, but the results require further investigation for context, and platforms need additional strategies to begin tackling CSAM.
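Because a match alone says little without context, one option is to hand reviewers the matched term together with the text around it. The sketch below assumes a simple in-memory flow; `ReviewItem`, `build_review_items`, and the `window` parameter are hypothetical names for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class ReviewItem:
    keyword: str  # the listed term that matched
    snippet: str  # matched text plus surrounding characters
    start: int    # offset of the match in the original text


def build_review_items(text, keywords, window=40):
    """Find exact, case-insensitive keyword matches and attach nearby context."""
    items = []
    for keyword in keywords:
        if not keyword:
            continue  # skip empty entries, which would match everywhere
        for match in re.finditer(re.escape(keyword), text, re.IGNORECASE):
            lo = max(0, match.start() - window)
            hi = min(len(text), match.end() + window)
            items.append(ReviewItem(keyword, text[lo:hi], match.start()))
    # Present matches in reading order.
    return sorted(items, key=lambda item: item.start)
```

The snippet gives a human reviewer enough surrounding text to judge whether a match reflects a real policy violation or a benign use of the same words.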

That’s where a solution like Safer comes in. Safer enables teams to detect CSAM and child sexual exploitation at scale, using proven hashing-and-matching technologies and state-of-the-art classifiers powered by predictive AI.

Through the free Keyword Hub, we hope to lower the barrier to protecting children online and to support digital platforms wherever they are in their CSAM assessment journey.

At Thorn, we’re committed to empowering platforms to proactively address these abuses — by providing the tools and resources they need to combat sexual harms against children online.