In today's digital landscape, trust and safety teams face an ever-growing challenge: protecting users from child sexual abuse material (CSAM) and exploitation. User-generated content and messaging features have made it easier than ever for bad actors to create and share CSAM online. With a single message to a child or the click of a button, perpetrators can obtain or upload CSAM, or even sextort a child, leaving platforms vulnerable to hosting this criminal content and activity.

That’s why in 2019, Thorn launched Safer to help protect content-hosting platforms and their users from the risks of CSAM. Today, we're excited to announce a new expansion of Safer Predict’s child sexual exploitation (CSE) text detection with the addition of a “grooming” label.

The power of AI to protect platforms

Our core Safer solution, now called Safer Match, offers a technology called hashing-and-matching to detect known CSAM — material that’s been reported but continues to circulate online. To date, Safer has matched over 3 million CSAM files, helping platforms address this content and create safer online environments.
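For readers unfamiliar with the technique, the core idea behind hashing-and-matching fits in a few lines. This is a minimal sketch, not Safer Match's implementation: it assumes exact matching with SHA-256, and the function names (`hash_bytes`, `find_matches`) and the known-hash set are hypothetical placeholders. Real-world matching systems commonly also use perceptual hashes so that resized or re-encoded copies of a file still match, which this sketch does not attempt.

```python
import hashlib


def hash_bytes(data: bytes) -> str:
    """Return the hex SHA-256 digest of a file's contents."""
    return hashlib.sha256(data).hexdigest()


def find_matches(uploads: dict[str, bytes], known_hashes: set[str]) -> list[str]:
    """Return the names of uploads whose exact hash is in `known_hashes`.

    `known_hashes` stands in for a hypothetical list of hashes of
    previously reported files; only byte-identical copies will match.
    """
    return [name for name, data in uploads.items()
            if hash_bytes(data) in known_hashes]


# Usage with placeholder bytes: only the byte-identical upload matches.
known = {hash_bytes(b"previously-reported-file")}
uploads = {"a.jpg": b"previously-reported-file", "b.jpg": b"new-upload"}
print(find_matches(uploads, known))  # ['a.jpg']
```

Because an exact hash changes if even one byte of a file changes, this approach can only find copies of files that are already known, which is why newly created material needs a different technique, such as the classifiers in Safer Predict.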

Safer Predict takes our mission even further. This AI-driven solution not only detects new and unreported CSAM images and videos, but also identifies potentially harmful conversations that include or could lead to child sexual exploitation. Now, it classifies grooming behavior and language that can indicate potential early-stage sexual exploitation or abuse of a minor. When detected, the model applies a “grooming” label and confidence score to each message, helping trust and safety teams prioritize, flag, or route conversations for review and intervention.

By leveraging state-of-the-art machine learning models, Safer Predict empowers trust and safety teams to:

- Cast a wider net for CSAM and CSE detection

- Identify text-based harms, including grooming, discussions of sextortion, self-generated CSAM, and potential offline exploitation

- Scale detection capabilities efficiently

To understand the impact of Safer Predict’s new capabilities, particularly around text and grooming, it helps to grasp the scope of child sexual exploitation online.

The growing challenge of online child exploitation

Child sexual abuse and exploitation are rising online at alarming rates:

- Thorn’s research shows that 40% of youth aged 9–17 have been approached online by someone trying “to befriend and manipulate” them — a grooming behavior that can indicate or lead to sexual exploitation. In the San Francisco Bay Area alone, that’s more than 325,000 children at risk.

- In June 2024, NPR released a series of stories highlighting the dramatic rise in online sextortion targeting children, in which bad actors coerce kids into sharing nude images and then extort them for financial gain. Thorn’s research confirms this devastating trend.

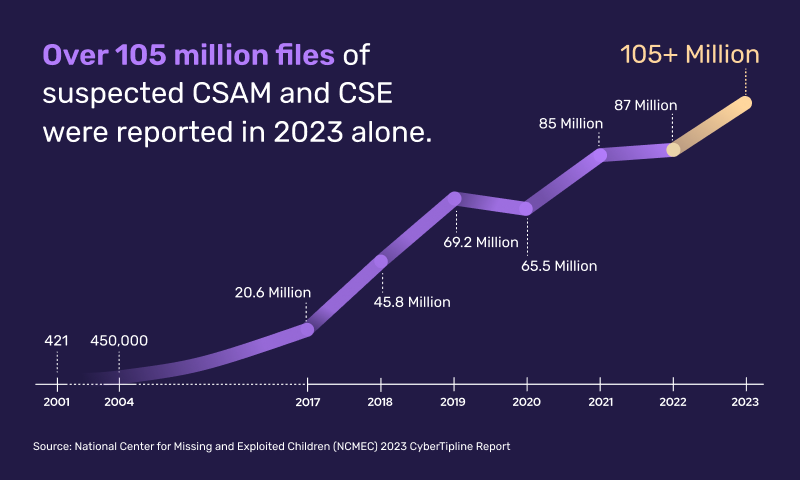

- In April 2024, the National Center for Missing and Exploited Children (NCMEC) released its 2023 CyberTipline Report, revealing that over 105 million files of suspected CSAM and CSE were reported in 2023 alone.

- And in January 2024, U.S. Senators demanded tech executives take action against the devastating rise of child sexual abuse happening on their platforms.

The reality is, bad actors are misusing social and content-hosting platforms to exploit children and to spread CSAM faster than ever. They also take advantage of new technologies like AI to rapidly scale their malicious efforts.

Hashing-and-matching is critical to tackling this issue, but the technology doesn’t detect text-based exploitation. Nor does it detect newly generated CSAM, which can depict a child who is being actively abused. Both text-based exploitation and novel CSAM signal high-stakes situations, and detecting them gives platforms a greater opportunity to intervene when abuse may be actively occurring.

Safer Predict’s predictive AI technologies let platforms detect these harms as they occur. Beyond reducing a company’s reputational risk, mitigating these threats protects platform users, including children, whether or not they’re the intended audience.

AI models built on trusted data

While it seems AI is everywhere these days, not all models are created equal. When it comes to AI, quality data matters, especially for detecting CSAM and CSE.

Thorn’s machine learning CSAM classification model was trained in part using trusted data from the National Center for Missing and Exploited Children (NCMEC) CyberTipline. In contrast, broader moderation tools designed for various kinds of harm might simply use age recognition data combined with adult pornography, which differs drastically from CSAM. Because Safer Predict’s models are trained on confirmed CSAM and real conversations, they can predict the likelihood that images and videos contain CSAM and that messages contain text related to child sexual exploitation.

Safer Predict’s text detection models are trained on messages:

- Discussing sextortion

- Asking for, transacting in, and sharing CSAM

- Asking for a minor’s self-generated sexual content, as well as minors discussing their own self-generated content

- Discussing access to and sexually harming children in an offline setting

Within grooming-related text, high-priority indicators often include behaviors such as:

- Planning or suggesting offline meetings

- Encouraging self-harm or emotional dependency

- Developing romantic or sexual relationships with minors

- Soliciting personal information or images

- Moving conversations to less moderated platforms

Together, Safer Match and Safer Predict provide comprehensive CSAM and CSE detection, offering not only the world’s largest database of aggregate hashes to match against, but also the power of AI to detect new and unreported CSAM — and now text-based child sexual exploitation.

Scalable solution tailored to your business

As the amount of content on platforms grows, trust and safety teams must increase the scale and efficiency of their monitoring to protect their platforms, all while maintaining moderators’ wellbeing. No easy feat. But Safer’s solutions empower teams to do just that.

Safer Predict offers highly customizable workflows, providing flexibility as a platform’s needs change. Teams can prioritize and escalate content by setting precision levels, and use the provided labels to categorize content. Using multiple labels at once, or “stacking” labels, allows teams to hone in on high-risk accounts.
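To make precision-based thresholds and label stacking more concrete, here is a minimal sketch of how a team might combine per-message labels and confidence scores to surface accounts for review. It assumes scores arrive as a mapping from label name to a confidence between 0 and 1; the `ScoredMessage` type, the `sextortion` label name, the threshold values, and the `min_labels` rule are all hypothetical and do not describe Safer Predict's actual API or label set.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ScoredMessage:
    """One message with hypothetical per-label confidence scores in [0, 1]."""
    account_id: str
    scores: dict[str, float]


# Hypothetical per-label thresholds. A team would tune each value to reach
# the precision level it wants for that label.
THRESHOLDS: dict[str, float] = {"grooming": 0.80, "sextortion": 0.90}


def labels_hit(msg: ScoredMessage, thresholds: dict[str, float]) -> set[str]:
    """Return the labels whose score meets or exceeds its threshold."""
    return {label for label, cutoff in thresholds.items()
            if msg.scores.get(label, 0.0) >= cutoff}


def prioritize_accounts(
    messages: list[ScoredMessage],
    thresholds: dict[str, float] = THRESHOLDS,
    min_labels: int = 2,
) -> list[tuple[str, set[str]]]:
    """Collect the distinct labels each account triggered ("stacking") and
    return accounts with at least `min_labels` labels, most labels first."""
    per_account: dict[str, set[str]] = defaultdict(set)
    for msg in messages:
        per_account[msg.account_id] |= labels_hit(msg, thresholds)
    stacked = [(acct, labels) for acct, labels in per_account.items()
               if len(labels) >= min_labels]
    return sorted(stacked, key=lambda item: len(item[1]), reverse=True)


# Usage: acct-1 triggers two distinct labels across its messages; acct-2 only one.
queue = prioritize_accounts([
    ScoredMessage("acct-1", {"grooming": 0.95}),
    ScoredMessage("acct-1", {"sextortion": 0.92}),
    ScoredMessage("acct-2", {"grooming": 0.85, "sextortion": 0.40}),
])
print([acct for acct, _ in queue])  # ['acct-1']
```

Raising a label's threshold typically trades recall for precision, which is the role the precision-level setting plays; requiring at least two stacked labels narrows the review queue to accounts that show multiple risk signals.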

Safer Predict can be deployed in two ways: self-hosted, which offers greater control over your data and over how the solution integrates into your workflows, or Thorn-hosted, which lets teams address additional content as needs change and requires minimal engineering resources. Both options scale as your business does.

Partnering for a safer internet

Safer Predict, like Safer Match, was created by the world’s largest team of engineers and data scientists dedicated exclusively to building technology that combats child sexual abuse and exploitation online. As new threats emerge, our team works to respond quickly; for example, our text-based detection solution helps combat the rise in sextortion.

With Safer Predict, we're offering trust and safety teams a powerful new tool in the ongoing effort to create safer online spaces for all users, especially children.

Ready to enhance your platform's protection against novel CSAM and text-based child exploitation? Learn more about how Safer Predict can support your trust and safety efforts.