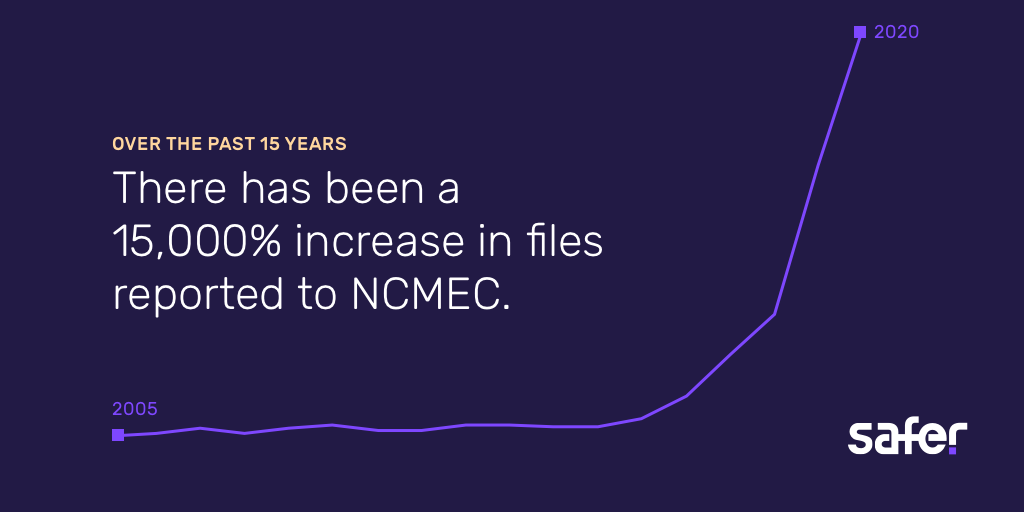

In 2004, the National Center for Missing & Exploited Children (NCMEC) reviewed roughly 450,000 files of child sexual abuse material, or CSAM. By 2019 that figure had grown to nearly 70 million, an increase of over 15,000%. That’s more than 1.3 million pieces of CSAM every week.

The problem is extremely difficult to address, it’s pervasive and it’s only continuing to grow.

In 2019 the New York Times published a groundbreaking four-part series on the rise and spread of CSAM online. As reported in that series, NCMEC had reviewed 45 million pieces of CSAM in 2018. Just a year later that figure had jumped by more than 53%. Now, as internet bandwidth continues to improve and new ways of connecting people digitally multiply, CSAM video is on the rise. Video now rivals imagery as the most reported CSAM format.

This is one of the dark sides of the explosive growth of the internet over the last 20+ years. As recently as the ’90s, CSAM production and trading networks were scattered and decentralized, which limited the scale of the problem. But the internet has made it easier for abusers to share child sexual abuse material within communities that can flourish on the same platforms we all use every day.

The result? That 15,000% explosion in CSAM files reported over the last 15 years.

And, like everything else online, that’s not the end of it. This content often gets shared widely after it is posted, recirculating for years, perpetuating the abuse and re-traumatizing victims.

Part of the problem is the fact that file sharing and user-generated content are foundational to the internet as it exists today. Online life is defined by the interactions we have on platforms like Facebook, the information we are able to find via simple Google searches and the open sharing and access to content that we enjoy on sites like YouTube and Amazon.

As a result, it’s not just image and video sharing platforms that are susceptible to the trading and hosting of this content, but any platform with an upload button. From what we know, CSAM exists in every corner of the digital world.

Solutions for detecting CSAM are often inconsistent and expensive, and they have to work across siloed sets of data. Proactive and comprehensive detection is a major technical challenge for most platforms.

But for the companies behind these platforms, identifying, reporting and removing CSAM is a critical step in disrupting the cycle of trauma for survivors. Taking a proactive approach to addressing this issue through timely and thorough reporting – with the full understanding that CSAM is likely present on your platform whether you know it or not – may help to accelerate the identification of victims, bring abusers and communities that share CSAM to justice and ensure that all of our online communities are kept safe for kids.

Technology has a solution

It can feel overwhelming, especially for the engineers, Trust & Safety teams, policy managers and many others at tech companies who are working on the front lines to keep CSAM off of their platforms. But the truth is you can make a real difference in this fight, and with the right tools, it isn’t as difficult as it sounds. The proactive detection of CSAM moves the needle on this mission, reducing harm for child victims and survivors, as well as ensuring online communities and platforms are protected from viewing abuse content.

Safety tech, an emerging category of technology solutions dedicated to protecting online communities and reducing the offline harm misused technology can create, has been gaining widespread attention as more of our lives happen in digital spaces. Increased investment in digital trust and safety tools allows online platforms to augment manual processes, mitigate risk and reallocate their team’s time to tasks that require human intervention. Accessing technology and expertise for proactive CSAM detection allows companies to better manage their risk without having to add headcount or expose their teams to this material.

At a high level, these tools work by comparing hashes of content found on a platform against hashes of known CSAM. When a match is found, that content is flagged for further review based on existing company policies and processes. Confirmed CSAM is reported to NCMEC, and its hash is added to the growing hash sets, drawn from various sources, that are used for comparison against potential CSAM.
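For engineers who want to picture that flow, here is a minimal sketch of the match-and-flag loop described above. It is an illustration only, not Safer’s implementation: the `HashMatcher` class, its method names and the in-memory review queue are all hypothetical, and it assumes a plain SHA-256 digest, which only catches byte-for-byte identical files. Production tools typically also rely on perceptual hashing so that resized or re-encoded copies still match.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of a file's bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class HashMatcher:
    """Hypothetical matcher: compares upload hashes against a known hash set."""

    known_hashes: set[str] = field(default_factory=set)
    review_queue: list[str] = field(default_factory=list)

    def scan(self, upload_id: str, data: bytes) -> bool:
        """Queue the upload for human review if its hash is already known."""
        if sha256_hex(data) in self.known_hashes:
            self.review_queue.append(upload_id)
            return True
        return False

    def add_confirmed(self, data: bytes) -> None:
        """After review confirms a file, add its hash to the known set."""
        self.known_hashes.add(sha256_hex(data))


# Usage with harmless placeholder bytes standing in for file contents.
matcher = HashMatcher()
matcher.add_confirmed(b"placeholder-file-a")
matcher.scan("upload-1", b"placeholder-file-a")  # hash is known: queued for review
matcher.scan("upload-2", b"placeholder-file-b")  # hash is unknown: not queued
```

Note that the sketch only stores and compares hashes, so the matching step itself never requires anyone to view the underlying files. The review and reporting steps are left to each company’s own policies.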

This is what proactive CSAM prevention looks like in practice.

It’s about reducing the exposure of one’s users and employees to harmful content and vastly reducing the opportunity for abuse content to go viral. By proactively looking for and removing this material, content-hosting platforms are uniquely positioned to add to the ecosystem’s shared knowledge, building a holistic system that will prevent future CSAM from ever making it into circulation.

After all, this isn’t a problem that’s impacting just one content site or one segment of the industry. CSAM affects us all, and we now have the tools to better address and prevent the threat.

Safer + AWS

Safer, built by Thorn, is the first holistic tool to help engineers and Trust & Safety teams proactively identify, remove and report CSAM on their platforms at scale. It integrates with internal systems and existing processes, or can be customized to meet your unique needs, but the process remains the same.

And it’s as powerful as it is accessible.

Safer matches content against a growing dataset of over 10 million hashes from multiple sources. It also uses machine learning, in the form of a high-performance CSAM classifier, to detect potentially new and unidentified CSAM in real time.
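To show how those two signals can fit together, here is a rough sketch of a triage step that checks a known-hash set first and falls back to a classifier score for files with no match. Everything in it is an assumption made for illustration: the `triage` function, the `Decision` labels, the `classifier` callable (any function returning a score between 0 and 1) and the 0.9 threshold are hypothetical and do not describe Safer’s actual API or settings.

```python
from __future__ import annotations

import hashlib
from enum import Enum
from typing import Callable


class Decision(Enum):
    KNOWN_MATCH = "known_match"    # hash matched a known file
    POSSIBLE_NEW = "possible_new"  # classifier score met the threshold
    NO_ACTION = "no_action"        # neither signal fired


def triage(
    data: bytes,
    known_hashes: set[str],
    classifier: Callable[[bytes], float],
    threshold: float = 0.9,  # assumed cutoff; each platform would tune its own
) -> Decision:
    """Check the hash set first, then fall back to the classifier score."""
    digest = hashlib.sha256(data).hexdigest()
    if digest in known_hashes:
        return Decision.KNOWN_MATCH
    if classifier(data) >= threshold:
        return Decision.POSSIBLE_NEW
    return Decision.NO_ACTION
```

In this sketch both `KNOWN_MATCH` and `POSSIBLE_NEW` would be routed to human review rather than acted on automatically, since a classifier score is a prediction and not a confirmation.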

It also further builds out the hash list datasets that all safety tech providers are using. You’ll be contributing in a tangible way to the broader movement to eliminate CSAM from the internet and defend children from sexual abuse. And we need you in this — one company, or a handful of companies, can’t do it alone. We won’t end this epidemic until every platform with an upload button is proactively identifying, removing, and reporting child sexual abuse material.

And, starting today, keeping kids, and your community, safer online just got easier.

Safer is now available on the AWS Marketplace.

That means that content-hosting platforms of all shapes and sizes, built for any purpose, can now proactively and automatically help to end this epidemic. No matter what other balls your team has in the air – maybe you’re bouncing between video calls, balancing multiple product priorities, or working to hone your sales and marketing processes – Safer will be there in the background keeping your community, your team, and children safe from harmful content.

With Safer on the AWS Marketplace, thousands of companies now have flexible, easy access to best-in-class technology to proactively detect and remove CSAM from their platforms without having to build it themselves. It integrates directly with your internal systems and runs 24/7, proactively keeping your community safe, fighting against the spread of CSAM and delivering justice for survivors.

Join us in building a better internet — the one we all deserve.