Deepfake technology is evolving at an alarming rate, lowering the barrier for bad actors to create hyper-realistic explicit images in seconds—with no technical expertise required.

For trust and safety teams, this presents an urgent challenge: AI-generated deepfake nudes are accelerating the spread of nonconsensual image abuse, reshaping how young people experience online harm, and creating new safety risks that platforms must mitigate.

At Thorn, we know that trust and safety teams are among the most important and influential players on the frontlines of preventing child sexual abuse and exploitation. Our latest research, Deepfake Nudes & Young People: A New Frontier in Technology-Facilitated Nonconsensual Sexual Abuse and Exploitation, provides crucial insights into how young people encounter and understand this issue, so that platforms can act now, before deepfake abuse becomes further entrenched in online culture.

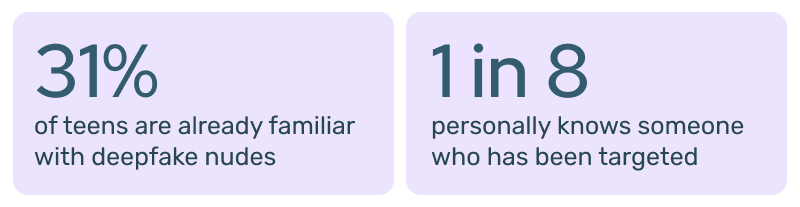

The study reveals that 31% of teens are already familiar with deepfake nudes, and 1 in 8 personally knows someone who has been targeted.

As deepfake technology grows more accessible, we have a critical window of opportunity to understand and combat this devastating form of digital exploitation—before it becomes normalized in young people's lives.

The emerging prevalence of deepfake nudes

The study, which surveyed 1,200 young people (ages 13–20), found that deepfake nudes are already a real experience that young people must navigate.

What young people told us about deepfake nudes:

- 1 in 17 teens reported that someone else had created deepfake nudes of them (i.e., they have been victims of deepfake nudes).

- 84% of teens believe deepfake nudes are harmful, citing emotional distress (30%), reputational damage (29%), and deception (26%) as the top reasons.

- Misconceptions persist. While most recognize the harm, 16% of teens still believe these images are “not real” and, therefore, not a serious issue.

- The tools are alarmingly easy to access. Among the 2% of young people who admitted to creating deepfake nudes, most learned about the tools through app stores, search engines, and social media platforms.

- Victims often stay silent. Nearly two-thirds (62%) of young people say they would tell a parent if it happened to them—but in reality, only 34% of victims did.

Why this matters

As our VP of Research and Insights, Melissa Stroebel, put it:

“No child should wake up to find their face attached to an explicit image circulating online—but for too many young people, this is now a reality.”

This research underscores the critical role that tech companies, platform integrity teams, and AI developers play in mitigating this form of nonconsensual intimate image abuse. The responsibility goes beyond detecting deepfake content: it requires a proactive approach to abuse prevention, product design, and policy enforcement.

What trust and safety teams can do

The spread of deepfake nudes highlights the urgent need for platforms to take responsibility for designing safer digital spaces. Trust and safety teams throughout the ecosystem have a role in defending against exploitative and abusive deepfakes. Platforms should:

- Adopt a Safety by Design approach to detect and prevent deepfake image creation and distribution before harm occurs. This can immediately reduce the misuse of generative AI technologies, set a precedent for responsible innovation, and build momentum for long-term regulatory frameworks.

- Commit to transparency and accountability by sharing how they address emerging threats like deepfake nudes and implementing solutions that prioritize child safety.

- Build a business case for product safety. Limiting the accessibility of tools designed or misused for abuse is not about restricting innovation but about ensuring these technologies are developed, deployed, and used responsibly. These practices can have a profound impact on brand reputation, which in turn strengthens the business case for mitigating these risks.

Without these interventions, the spread of deepfake nudes will continue to outpace efforts to curb this misuse of generative AI, further normalizing the creation and distribution of nonconsensual content at scale.