As generative AI models reach unprecedented scale and reach, the engineering challenges have moved beyond efficient training. Today's focus is on integrating generative AI features across consumer platforms, lowering barriers to adoption, and putting AI-powered tools into the hands of more users.

Yet this push for rapid adoption comes with an uncomfortable reality: the same capabilities that drive innovation also create new opportunities for harm. Bad actors are exploiting generative AI to create realistic deepfake nudes, generate child sexual abuse material, and scale harassment with alarming ease. For companies building and deploying generative AI technologies, the stakes couldn't be higher.

The traditional approach of "build first, address safety later" simply doesn't work in this environment. When your technology can be misused to harm children at scale, reactive fixes aren't enough. The solution is a fundamentally different approach: Safety by Design, a set of responsible AI design principles that embed child protection into every stage of AI model development, deployment, and maintenance.

AI-generated CSAM (AIG-CSAM) and deepfake abuse

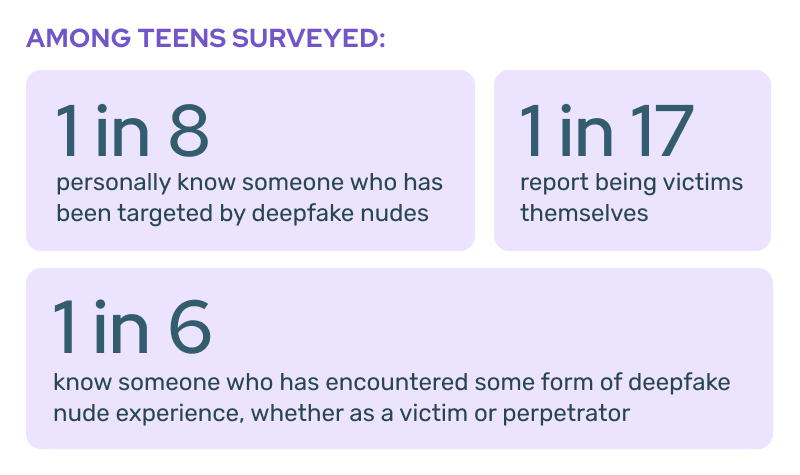

Recent research from Thorn shows how quickly AI-generated abuse has entered young people's lives. Among teens surveyed, 1 in 8 personally know someone who has been targeted by deepfake nudes, and 1 in 17 report being victims themselves. Perhaps most revealing: 1 in 6 teens know someone who has had some form of experience with deepfake nudes, whether as a victim or a perpetrator.

The ease of access to AI tools is part of the challenge. Among young people who admit to creating deepfake nudes of others, 70% downloaded the apps they used directly from their device's app store. These aren't sophisticated hackers—they're children using mainstream tools to create harmful content with minimal effort or technical knowledge.

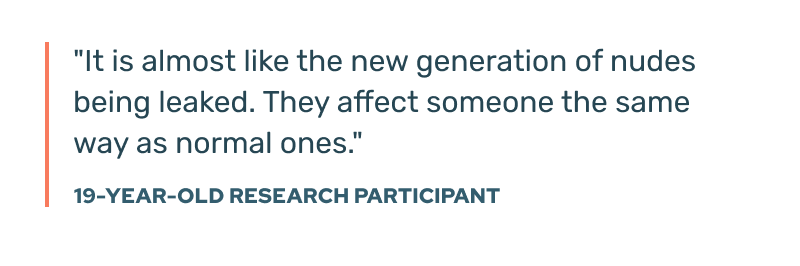

What makes this particularly urgent is the normalization effect. The technology is rapidly becoming embedded in youth culture, even though 84% of young people recognize that deepfake nudes cause real harm to victims. As one 19-year-old research participant put it: "It is almost like the new generation of nudes being leaked. They affect someone the same way as normal ones."

For generative AI companies, this is a critical inflection point. The window for proactive intervention is narrowing as these technologies become more accessible and their misuse more normalized. Responsible AI development here isn't just about compliance; it's about protecting vulnerable populations while still fostering innovation.

The cost of inaction—measured in legal exposure, reputational damage, and human harm—far exceeds the investment required for proactive safety measures.

The cost of reactive safety approaches for generative AI

For decades, the technology industry has operated under a familiar playbook: build fast, break things, iterate quickly, and address problems as they emerge. This "innovator's approach" can be reasonable when the stakes are low and a bug means inconvenience rather than irreversible harm.

Generative AI fundamentally breaks this paradigm. Unlike traditional software failures that can be patched or rolled back, AI-generated child sexual abuse material can't be "un-created." Once harmful content enters the digital ecosystem, it spreads rapidly across platforms, often beyond the reach of takedown requests. The scale at which generative AI operates, enabling the creation of thousands of harmful images in minutes, means that by the time companies react to misuse, significant damage has already been done.

The implications are commercial as well as moral. Legal requirements are tightening around the globe, and platforms face significant liability for hosting AI-generated child sexual abuse material. Reputational damage from association with such content can be swift and devastating, particularly as public scrutiny of AI safety intensifies. Regulatory pressure is mounting as governments worldwide develop responsible AI frameworks that specifically target harmful content. The EU's AI Act, for instance, requires risk assessments and safety measures for certain categories of AI systems before deployment, not after harm occurs.

Market dynamics are accelerating this shift. Industry leaders, such as those partnering with Thorn on generative AI Safety by Design, have already begun implementing comprehensive responsible AI guidelines. They recognize that ethical AI development provides a competitive advantage rather than a constraint. Early adopters are setting new industry standards, making reactive approaches not just risky, but increasingly obsolete.

Safety by Design: Proactive AI principles

Safety by Design represents a fundamental shift from reactive problem-solving to proactive prevention. Rather than waiting for harmful content to emerge and then scrambling to address it, this approach embeds child safety considerations into every decision point of AI model development. It's the difference between installing security measures after a break-in and building a secure system from the beginning.

At its core, Safety by Design recognizes that generative AI systems present unique opportunities to prioritize child protection throughout their entire lifecycle. This responsible AI framework operates across three critical stages: development, deployment, and maintenance.

Development Stage: Building safety in

The development phase focuses on ensuring that your training data and model architecture actively prevent harmful outputs. Key considerations include removing child sexual abuse material from training datasets using purpose-built detection solutions, biasing models away from generating child exploitation content, and conducting comprehensive safety testing, including red teaming sessions focused specifically on child safety scenarios. This stage also involves establishing clear guidelines for training data and documenting child safety protocols to guide your team's decisions.
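To make the dataset-screening step more concrete, here is a minimal Python sketch of one small piece of it: excluding files whose hash appears on a blocklist before they reach training. It assumes a hypothetical set of known hashes supplied by a trusted detection provider, and the function names are illustrative only. Exact SHA-256 matching catches only byte-identical copies, so real pipelines typically pair it with perceptual hashing and classifiers from purpose-built detection solutions.

```python
import hashlib
from typing import Iterable, List, Set, Tuple


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def filter_training_items(
    items: Iterable[Tuple[str, bytes]],
    blocked_hashes: Set[str],
) -> Tuple[List[str], List[str]]:
    """Split (item_id, raw_bytes) pairs into kept and flagged item IDs.

    blocked_hashes is a hypothetical set of hex digests from a trusted
    detection provider. Exact hashes only match byte-identical files.
    """
    kept: List[str] = []
    flagged: List[str] = []
    for item_id, data in items:
        if sha256_hex(data) in blocked_hashes:
            flagged.append(item_id)  # excluded from the training set
        else:
            kept.append(item_id)
    return kept, flagged


if __name__ == "__main__":
    # Toy data only: placeholder byte strings, not real files or hash lists.
    blocked = {sha256_hex(b"known-bad-placeholder")}
    kept, flagged = filter_training_items(
        [("img-001", b"benign bytes"), ("img-002", b"known-bad-placeholder")],
        blocked,
    )
    print(kept, flagged)  # ['img-001'] ['img-002']
```

Keeping the flagged IDs, rather than silently dropping them, leaves a record your team can review and act on under its documented child safety protocols.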

Deployment Stage: Active protection

Once your model goes live, Safety by Design principles shift to real-time protection. This includes monitoring input prompts for attempts to generate harmful content, implementing output scanning for child sexual abuse material, and deploying content filtering systems to prevent such content from being generated. User agreements must explicitly require child safety compliance, while technical measures, such as watermarking and provenance systems, help track and authenticate content. Prevention messaging and deterrence notices can also redirect users away from harmful requests.
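The deployment-stage controls can be pictured as two gates around the model: one on the prompt and one on the output. The Python sketch below illustrates that control flow under stated assumptions and is not a reference implementation. `prompt_is_unsafe`, `generate`, and `output_is_unsafe` are hypothetical callables standing in for a prompt classifier, the model, and an output scanner, and the notice text is a placeholder.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Placeholder deterrence text; real wording would come from policy and legal teams.
DETERRENCE_NOTICE = "This request violates our child safety policy and was not processed."


@dataclass
class GenerationResult:
    allowed: bool
    output: Optional[bytes]
    notice: Optional[str]
    stage: str  # "input", "output", or "passed": which gate made the decision


def guarded_generate(
    prompt: str,
    prompt_is_unsafe: Callable[[str], bool],
    generate: Callable[[str], bytes],
    output_is_unsafe: Callable[[bytes], bool],
) -> GenerationResult:
    """Return generated content only if both the prompt and the output pass their checks."""
    # Gate 1: screen the prompt before spending any compute on generation.
    if prompt_is_unsafe(prompt):
        return GenerationResult(False, None, DETERRENCE_NOTICE, "input")

    output = generate(prompt)

    # Gate 2: scan the generated content before it is returned to the user.
    if output_is_unsafe(output):
        return GenerationResult(False, None, DETERRENCE_NOTICE, "output")

    return GenerationResult(True, output, None, "passed")


if __name__ == "__main__":
    # Toy stand-ins for real classifiers and a real model.
    result = guarded_generate(
        "a watercolor of a lighthouse",
        prompt_is_unsafe=lambda p: False,
        generate=lambda p: p.encode("utf-8"),
        output_is_unsafe=lambda o: False,
    )
    print(result.allowed, result.stage)  # True passed
```

Recording which gate made each decision gives the maintenance stage something concrete to audit when detection systems are updated.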

Maintenance Stage: Continuous vigilance

Responsible AI development doesn't end at launch. The maintenance phase involves ongoing assessment of legacy models for safety gaps, regular updates to detection systems, and continuous monitoring of emerging threat patterns. This includes partnering with safety organizations, maintaining clear channels for reporting violations, and conducting regular safety audits to ensure your systems evolve in response to new challenges.

Why this framework is required

The power of Safety by Design lies in its proactive nature. Traditional approaches focus on detection and removal after harmful content already exists, a reactive stance that's always one step behind bad actors. Safety by Design aims to prevent harmful content from being created in the first place.

Built-in safety measures are more effective than bolt-on solutions. When child protection is embedded in your model's architecture and training process, it becomes an integral part of how the system operates rather than an external filter that bad actors can circumvent. This approach provides stronger protection and reduces the ongoing operational burden of content moderation.

For generative AI companies, implementing Safety by Design principles isn't just about risk mitigation—it's about building sustainable, ethical AI systems that can scale responsibly while maintaining public trust and regulatory compliance.

Generative AI leadership and partnership

Implementing Safety by Design principles requires more than good intentions; it demands expert guidance and industry-wide collaboration. Thorn has been at the forefront of this movement, convening leading generative AI companies, including Google, OpenAI, Meta, Stability AI, and others, around these principles.

This collaborative approach has extended beyond the private sector. Thorn has worked closely with NIST and IEEE to transform Safety by Design principles into actionable industry standards. These ongoing partnerships demonstrate that responsible AI development is fast becoming the foundation for sustainable AI innovation.

With NIST, Thorn has worked to inform the agency's existing efforts to establish comprehensive industry standards that reduce risks related to generative AI and synthetic media, helping ensure these standards reflect our industry-leading Safety by Design principles and mitigations.

With IEEE, Thorn has driven efforts to create a recommended practice that builds on our Safety by Design principles and mitigations, ensuring that the perspective of the global scientific community is reflected through IEEE's position as an internationally respected standards-setting institution.

The complexity of child safety in AI systems means no single company can solve these challenges alone. Shared standards and expert guidance help ensure that safety measures are both effective and practical to implement. When industry leaders work together, they establish norms that protect children while fostering the responsible growth of generative AI technologies.

The companies pioneering these approaches today are setting the standards that will define the industry tomorrow.

Take the next step with Safety by Design

The window for proactive intervention is closing. As deepfake technologies become more accessible and their misuse more normalized, the cost of reactive approaches will only increase. Companies that act now to implement comprehensive Safety by Design principles will protect vulnerable populations and position themselves as leaders in an increasingly regulated market.

Ready to get started? Download our comprehensive Safety by Design checklist—a practical guide that walks you through child safety considerations across the entire AI lifecycle, from development through maintenance. Take the time to work with your team and translate these principles into practical safety measures. If you need support, our team is here to help.