The National Center for Missing and Exploited Children recently released its 2023 CyberTipline report, which captures key trends from reports submitted by the public and digital platforms on suspected child sexual abuse material and exploitation. At Thorn, these insights are crucial to our efforts to defend children from sexual abuse and to the innovative technologies we build to do so.

This year, two clear themes emerged from the report. First, child sexual abuse remains a fundamentally human issue, one that technology is making significantly worse. Second, leading-edge technologies must be part of the solution to this crisis in our digital age.

The scale of child sexual abuse continues to grow

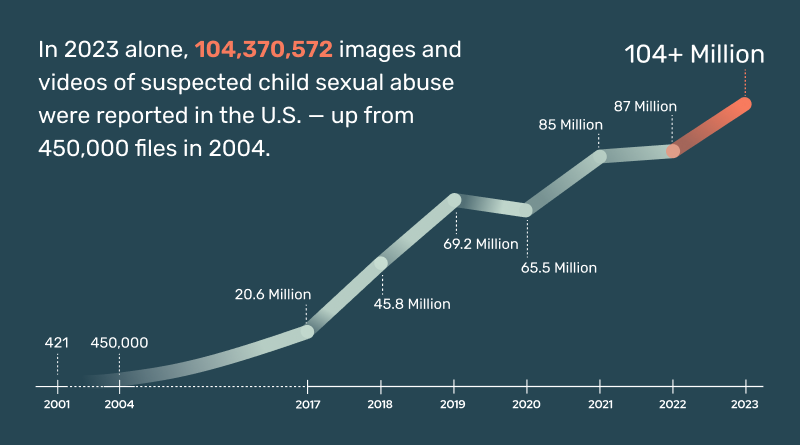

In 2023, NCMEC’s CyberTipline received a staggering 36.2 million reports of suspected child sexual exploitation. These reports included more than 104 million files of suspected child sexual abuse material (CSAM) — photos and videos that document the sexual abuse of a child. These numbers should give us immediate pause because they represent real children enduring horrific abuse. They underscore the urgent need for a comprehensive response.

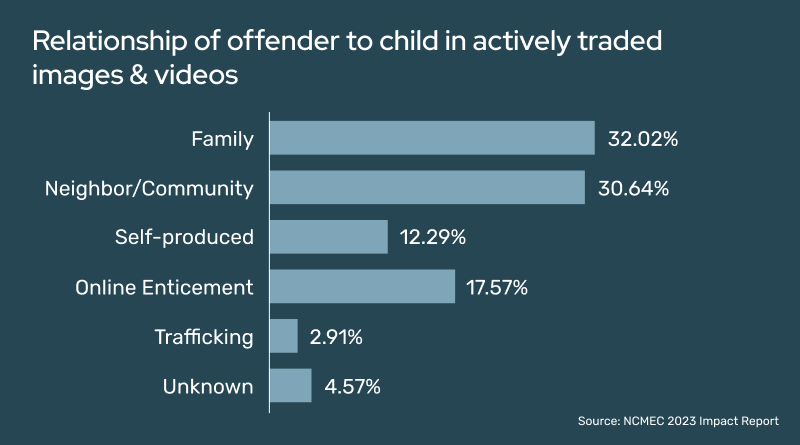

NCMEC’s Impact Report, which was released earlier this year, shed light on the creators of much of this CSAM, including files that NCMEC’s Child Victim Identification Program was already aware of. Sadly, for nearly 60% of the children already identified in distributed CSAM, the abusers were people with whom kids should be safe: Family members and trusted adults within the community (such as teachers, coaches, and neighbors) are most likely to be behind CSAM actively circulating on the internet today. These adults exploit their relationships to groom and abuse children in their care. They then share, trade, or sell the documentation of that abuse in online communities, and the spread of that material revictimizes the child again and again.

Of course, the astounding scale of child sexual abuse and exploitation is larger than these submitted reports. And modern technologies are responsible for accelerating predators’ abilities to commit sexual harms against children.

This means we must meet scale with scale, in part by building and employing advanced technologies to speed up the fight against child sexual abuse from every angle.

Technology must be part of the solution

The efficiency and scalability of technology can create a powerful, positive force. Thorn has long recognized the advantage of AI and machine learning (ML) to identify CSAM. Our Safer Predict solution uses state-of-the-art predictive AI to detect unknown CSAM circulating on the internet as well as new CSAM files being uploaded all the time. Through our text classifier, it can now also detect potentially harmful conversations between perpetrators and children, such as sextortion and solicitation of nudes. These solutions provide vital tools needed to stop the spread of CSAM and abuse occurring online.

Additionally, the same AI/ML models that detect CSAM online also dramatically speed up law enforcement efforts in child sexual abuse investigations. As agents conduct forensic review of mountains of digital evidence, classifiers, like the one in Safer Predict, use AI models to automate CSAM detection, saving agents critical time. This helps law enforcement identify child victims faster so they can remove those kids from nightmare situations.

All across the child safety ecosystem, speed and scale matter. Cutting-edge technologies must be used to foil both the behaviors that encourage and incentivize abuse and the pipelines for CSAM, while also enhancing prevention and mitigation strategies. Technology can expedite our understanding of emerging threats, support Trust & Safety teams in creating safer online environments, improve hotline response actions, and speed up victim identification.

In other words, deploying technology to all facets of the child safety ecosystem will be necessary if we’re to do anything more than bring a garden sprinkler to a forest fire.

Tech companies play a key role

From 2022 to 2023, reports of child sexual abuse and exploitation to NCMEC rose by over 4 million. While this increase correlates with rising abuse, it also means platforms are doing a better job detecting and reporting CSAM. This is a necessary part of the fight against online exploitation, and we applaud the companies investing in protecting kids on their services.

Still, only a small portion of digital platforms submit reports to NCMEC. Every content-hosting platform must recognize the role it plays in the child safety ecosystem and the opportunity it has to protect children and its community of users.

When platforms detect CSAM and child sexual exploitation and submit reports to NCMEC, that data can offer insights that can lead to child identification and removal from harm. That’s why it’s critical for platforms to submit high-quality reports with key user information that helps NCMEC to pinpoint the offense location or the appropriate law enforcement agency.

Additionally, the internet’s interconnectedness means abuse doesn’t stay on one platform. Children and bad actors move across spaces fluidly: abuse can start on one platform and continue on another. CSAM, too, spreads virally. This means it’s imperative that tech companies collaborate in innovative ways to combat abuse as a united front.

A human issue made worse by emerging tech

Last year, NCMEC received 4,700 reports of CSAM or other sexually exploitative content related to generative AI. While that sounds like a lot — and it is — these reports represent a drop in the bucket compared to CSAM produced without AI.

The growing number of reports involving gen AI should, however, set off alarm bells. Just as the internet itself accelerated offline and online abuse, misuse of AI has the potential to pose profound threats to child safety. AI technologies make it easier than ever to create content at scale, including CSAM as well as solicitation and grooming text. AI-generated CSAM ranges from AI adaptations of original abuse material, to the sexualization of benign content, to fully synthetic CSAM, often produced by models trained on CSAM involving real children.

In other words, it’s important to remember that child sexual abuse remains a fundamentally human issue, impacting real kids. Generative AI technologies in the wrong hands facilitate and accelerate this harm.

In the early days of the internet, reports of online abuse were relatively few. But from 2004 to 2022, they skyrocketed from 450,000 reports to 87 million, aided by digital tech. During that time, collectively, we failed to sufficiently address the ways technology could compound and accelerate child sexual abuse.

Today, we know far more about how child sexual abuse happens and the ways technology is misused to sexually harm children. We’re better positioned to rigorously assess the risks that generative AI and other emerging technologies pose to child safety. Each player in the tech industry has the responsibility to ensure children are protected as these applications are built. That’s why we collaborated with All Tech Is Human and leading generative AI companies to commit to principles to prevent the misuse of AI to further sexual harms against children.

Together, we can create a safer internet

From financial sextortion to online enticement of children for sexual acts, the 2023 NCMEC report captures the continued growth of disturbing trends in child exploitation. Bringing an end to child sexual abuse and exploitation will require a force of even greater might and scale, and it will require us to see technology as our ally.

That’s why Thorn continues to build leading-edge technology, expand partnerships across the child safety ecosystem, and bring more tech companies and lawmakers to the table to empower them to take action on this issue.

Child sexual abuse is a challenge we can solve. When the best and brightest minds wield powerful innovations and work together, we can build safer online communities for all.