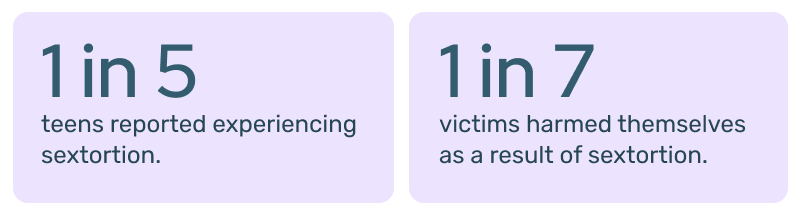

Sexual extortion, or ‘sextortion’, is evolving rapidly, presenting increasingly complex detection and prevention challenges for digital platforms. What was once considered a relatively rare form of abuse has become an urgent threat with devastating consequences that demand attention from trust and safety teams. Our new research reveals that 1 in 7 victims harmed themselves as a result of being sextorted. This isn't just a number—it represents real children experiencing real harm on platforms that trust and safety teams are tasked with protecting.

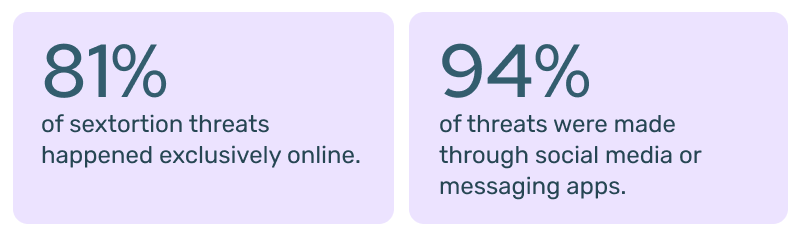

For safety professionals, this presents a major challenge: sextortion activity is almost exclusively funneled through online platforms, with 94% of threats made via digital forums, predominantly social media or direct messaging services. Current detection methods often miss crucial signals, particularly in relationship-based cases that may appear as normal communications until threats emerge.

At Thorn, we know these forms of exploitation are difficult to moderate. Our latest research, Sexual Extortion & Young People: Navigating Threats in Digital Environments, provides new insights based on surveys with 1,200 young people aged 13-20. The findings reveal patterns that can help platforms develop more effective detection and intervention strategies. This blog will highlight the key findings from the report, focusing on the implications for trust and safety professionals, and provide a thorough overview of sextortion in 2025.

The current landscape of sextortion

While financial sextortion has received significant media attention, our research reveals a much broader spectrum of exploitation that platforms must address:

Relational sextortion: When someone the victim feels they know—often a dating partner, friend, or even family member—uses intimate images to control or manipulate them. The demands usually require the victim to remain in or return to a relationship with the perpetrator.

Exploitative content sextortion: The extortionist demands that the victim share more intimate photos, videos, or other material.

Financial sextortion: The extortionist demands money to prevent images from being shared, often perpetrated by organized criminal networks that frequently target young men.

Sadistic sextortion: Recently on the rise, these demands center on suffering or submission through violence, self-harm, or destruction, and are often coordinated through organized online groups.

The scale of this problem is substantial. One in five teens (20%) in our survey reported experiencing sextortion. For trust and safety teams, this means platforms must design safety systems that address the full spectrum of sextortion types—not just the most visible financial cases that have recently dominated headlines. Detection systems that focus solely on financial transactions or explicit threats often miss the more nuanced offline relationship situations, which our research shows account for one out of three cases.

Who's at risk

Effective trust and safety interventions require understanding which users face the highest risk of sextortion. Our research reveals that sextortion occurs across diverse relationships and contexts, challenging common assumptions about who perpetrates this abuse and who experiences it.

While many safety features are designed to protect against threats from strangers, 1 in 3 victims (36%) knew their extortionist offline—often a romantic partner, school peer, or even a family acquaintance. This presents a significant detection challenge, as communications between known contacts typically trigger fewer safety flags than interactions with new or unknown accounts.

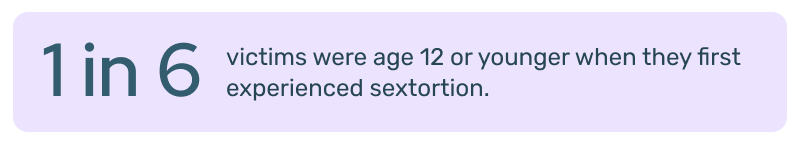

Age is another critical factor that should inform safety system design. Our research found that 1 in 6 victims were age 12 or younger when they first experienced sextortion. These younger children are particularly vulnerable to manipulation and less likely to recognize warning signs or know how to access help resources. This finding suggests that platforms serving younger users should implement more robust protection measures, particularly around private messaging and image-sharing functionalities.

The impact of sextortion and the way it unfolds can differ across groups:

- Boys and young men face different patterns of abuse, being disproportionately targeted for financial sextortion. Our research found that 36% of male victims reported financial demands, compared to lower rates among female victims. This suggests that platforms with payment or gaming currency systems should implement specific monitoring for gender-targeted financial sextortion attempts.

- By comparison, female (43%) and LGBTQ+ (44%) youth were considerably more likely to experience demands for more explicit imagery.

- LGBTQ+ youth experience significantly higher rates of self-harm following sextortion. While 10% of non-LGBTQ+ victims reported self-harm behaviors, that rate nearly triples to 28% among LGBTQ+ youth. This alarming difference highlights how existing vulnerabilities can compound the trauma of sextortion and should inform how platforms prioritize intervention resources.

These findings make clear that trust and safety teams should design safety systems with these vulnerabilities in mind, with awareness of the different ways sextortion can surface in young users' online experiences, and with an appreciation of the range of barriers victims may weigh when deciding whether to seek support.

Creating a safer future

Despite the severe harm caused by sextortion, many victims never report their experiences or seek help. This presents a fundamental challenge for trust and safety teams, as traditional content moderation approaches rely heavily on user reporting to identify abusive interactions.

Several factors create reporting barriers that trust and safety teams must account for when designing safety systems:

- Shame and embarrassment often prevent victims from coming forward. Many feel they will be judged, blamed, or punished for having shared images in the first place. Yet not every case starts with a shared image: 13% of victims reported that their extortionist used an AI-generated deepfake nude, which adds another layer of violation and can make victims feel even more helpless.

- Fear of escalation keeps victims quiet. They worry that reporting might lead to the perpetrator carrying out their threats or making the situation worse. This creates a paradoxical situation where safety features designed to help victims may actually increase their perceived risk.

- Lack of awareness about available resources leaves many young people feeling they have nowhere to turn. Even when platforms provide reporting tools, victims may not understand how to use them effectively or what will happen after they report.

- Concern about losing access to devices or platforms if parents, guardians, or platforms discover the situation often prevents reporting. Young users may fear being deplatformed if they call attention to themselves as underage users, or that adults will respond by restricting their online activities rather than addressing the abuse itself.

Taken together, these barriers to reporting underscore the critical need for proactive detection systems that don't rely solely on victim reporting. Machine learning classifiers that identify linguistic patterns associated with sextortion, combined with image hashing technologies, can help platforms identify potential sextortion cases even when victims remain silent.
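To make the idea of combining a text signal with a hash signal concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of any platform's or Thorn's actual system: the phrase patterns, weights, threshold, and the names `text_risk_score`, `image_digest`, and `triage` are all hypothetical. A fixed pattern list stands in for a trained classifier, and an exact SHA-256 digest stands in for the perceptual hashing that production systems typically use to tolerate resizing and re-encoding.

```python
import hashlib
import re
from dataclasses import dataclass
from typing import Optional, Set

# Hypothetical phrase patterns and weights, for illustration only.
# A real deployment would rely on a trained classifier, not a fixed list.
RISK_PATTERNS = [
    (re.compile(r"\b(send|pay|wire)\b.*\b(money|gift ?cards?)\b", re.I), 0.5),
    (re.compile(r"\b(i will|i'll|gonna)\b.*\b(post|share|leak)\b", re.I), 0.4),
    (re.compile(r"\b(your|ur)\b.*\b(friends|family|followers|school)\b", re.I), 0.3),
]


def text_risk_score(message: str) -> float:
    """Sum the weights of every matching pattern, capped at 1.0."""
    total = sum((w for pattern, w in RISK_PATTERNS if pattern.search(message)), 0.0)
    return min(total, 1.0)


def image_digest(image_bytes: bytes) -> str:
    """Exact-match digest; a stand-in for a perceptual hash (assumption)."""
    return hashlib.sha256(image_bytes).hexdigest()


@dataclass
class TriageResult:
    text_score: float
    hash_match: bool
    needs_review: bool


def triage(
    message: str,
    image_bytes: Optional[bytes],
    known_digests: Set[str],
    threshold: float = 0.6,  # hypothetical review threshold
) -> TriageResult:
    """Flag a message for human review if either signal fires."""
    score = text_risk_score(message)
    matched = image_bytes is not None and image_digest(image_bytes) in known_digests
    return TriageResult(score, matched, matched or score >= threshold)


if __name__ == "__main__":
    known = {image_digest(b"previously-reported-image")}
    print(triage("Pay me money or I'll post this to your friends", None, known))
    print(triage("See you at practice tomorrow", None, known))
```

In this sketch either signal alone is enough to route a conversation to human review, so a case can be surfaced without the victim filing a report. The output is a review flag rather than an automatic enforcement action, because a simple heuristic like this one would produce false positives.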

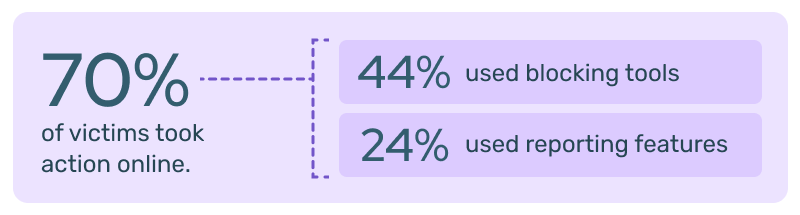

The data shows that platform safety features are already vital intervention points. Seventy percent of victims took action online, primarily through blocking tools (44%) and reporting features (24%). This high utilization rate demonstrates that these features serve as essential lifelines for young people in crisis. However, the fact that sextortion remains prevalent suggests that current safety measures may not be sufficient.

For trust and safety teams, several key considerations emerge from our research:

Enhance detection and reporting mechanisms

While most victims utilize available safety features, the effectiveness of these tools varies significantly. Reporting flows should be designed with the barriers we've identified in mind—reducing friction and stigma while providing clear information about what happens after a report is submitted.

Age-appropriate interventions are particularly important. Our data shows that younger victims respond differently than older teens, with younger users more likely to delete apps, report to platforms, and tell parents. Safety features should be tailored to these age-specific behaviors.

Address all forms of sextortion

Financial sextortion draws the most media attention, but our research shows that relationship-based and image-based sextortion are equally or more prevalent. Trust and safety teams must design comprehensive safety systems that address the full spectrum of sextortion types.

Implement layered safety approaches

No single intervention can address the complex challenge of sextortion. Instead, platforms should implement layered safety approaches:

- User-level interventions to make platforms inhospitable to extortionists, including friction points in messaging between new connections and educational prompts at key moments.

- Proactive detection capabilities that don't rely solely on user reporting, particularly for identifying behavior patterns associated with sextortion before harm occurs.

- Collaborative signal sharing across the tech ecosystem, as perpetrators often operate across multiple platforms.

- Clearly accessible safety features across platforms, including messaging services, to ensure consistent protection regardless of which service a young person uses.

- Submitting actionable reports of sextortion with robust data to NCMEC, in compliance with the REPORT Act.
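As one illustration of the first layer in the list above, the following Python sketch shows how a friction point for image sharing between new connections might be decided. Everything here is an assumption made for the example: the seven-day window, the `Connection` fields, the action names, and the function `friction_for_image_send` are hypothetical, and real thresholds would need to be tuned against a platform's own data.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical window during which a connection is treated as "new".
NEW_CONNECTION_WINDOW = timedelta(days=7)


@dataclass
class Connection:
    connected_at: datetime   # when the two accounts first connected
    mutual_contacts: int     # number of contacts the accounts share


def friction_for_image_send(conn: Connection, now: datetime, sender_is_minor: bool) -> str:
    """Pick a friction level before an image is sent in a private message.

    Returns one of three hypothetical action names:
    "confirm_and_educate", "educate", or "none".
    """
    is_new = now - conn.connected_at < NEW_CONNECTION_WINDOW
    if is_new and sender_is_minor and conn.mutual_contacts == 0:
        # Highest-risk case in this sketch: a minor, a new contact, no shared network.
        return "confirm_and_educate"
    if is_new:
        # Any other new connection gets an educational prompt only.
        return "educate"
    return "none"


if __name__ == "__main__":
    now = datetime(2025, 1, 10)
    stranger = Connection(connected_at=datetime(2025, 1, 8), mutual_contacts=0)
    print(friction_for_image_send(stranger, now, sender_is_minor=True))
```

A rule like this only covers new, online-only contacts. It would not catch cases where the extortionist is someone the victim already knows, which is why it belongs alongside the other layers rather than in place of them.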

Our research shows different reporting patterns based on whether victims knew their extortionists offline or online-only, with offline connections leading to more offline reporting. This insight should inform how platforms design their safety systems, particularly for relationship-based sextortion cases.

By implementing these approaches, trust and safety teams can create digital environments where young people can explore, connect, and express themselves with significantly reduced risk of exploitation. The prevalence and impact of sextortion demand urgent action—but our research shows that with thoughtful, evidence-based interventions, platforms can make meaningful progress in protecting their youngest and most vulnerable users.