When millions of photos and videos are uploaded to your platform each day, the responsibility to ensure user safety is palpable.

Take it from Flickr, a Safer super-user that has prioritized detecting child sexual abuse material (CSAM) on its platform to protect its users.

The Challenge

Despite the massive volume of content uploaded to Flickr daily, the company’s Trust and Safety team has always been deeply committed to keeping its platform safe. The inherent challenge, however, is that working with incomplete or siloed hash sets makes CSAM detection difficult. Many images and videos uploaded to the platform have never been seen before or may have even been altered or computer generated.

Flickr therefore prioritizes detecting new and previously unknown CSAM, which matching against existing hash sets cannot surface on its own. Doing so requires artificial intelligence.

The Solution

In 2021, Flickr began using Safer’s Image Classifier – a machine learning classification model that predicts the likelihood that a file contains a child sexual abuse image.

Flickr’s Trust and Safety team incorporated the technology into its workflow to increase efficiency and to detect images that likely would not have been discovered otherwise.

The Results

It didn’t take long for Flickr to see the benefits of implementing the Image Classifier on its platform. One recent Classifier hit led to the discovery of 2,000 previously unknown images of CSAM. Once the images were reported to NCMEC, law enforcement initiated an investigation.

Because Flickr is also an avid user of SaferList – a feature of Safer that allows participating platforms to contribute verified CSAM hashes to a database to be shared with other Safer users – another company was able to find CSAM on its own platform from the image set that led to the investigation.

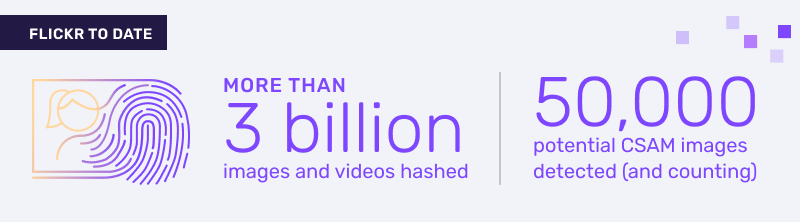

To date, Flickr – which has a team of fewer than 10 full-time Trust and Safety employees – has hashed more than 3 billion images and videos and detected 50,000 potential CSAM images (and counting).

“[Thorn’s Safer and its Classifier technology] takes a huge weight off the shoulders of our content moderators, who are able to proactively pursue and prioritize harmful content,” said Jace Pomales, Trust and Safety Manager at Flickr.

To learn more about how to put Safer to work on your platform, contact us.