The volume of child sexual abuse material (CSAM) found on the internet is growing at an unprecedented rate and cannot be stopped by human intervention alone. In 2004, the National Center for Missing & Exploited Children (NCMEC) reviewed roughly 450,000 CSAM files. By 2023, NCMEC’s CyberTipline received over 104 million files of suspected CSAM. That’s an average of 2 million files reported per week.

Hosting this content is a potential risk for every platform that hosts user-generated content—be it a profile picture or expansive cloud storage space. In September 2019, The New York Times declared that “The Internet is Overrun With Images of Child Sexual Abuse.” Since then, the number of image and video files reported annually to NCMEC has grown, and new threats have emerged.

Thorn is committed to empowering the tech industry with tools and resources to disrupt CSAM at scale. Hashing and matching is one of the most important pieces of technology that you can deploy to help keep your users and your platform protected from the risks of hosting this content, while also helping to disrupt the viral spread of CSAM and the cycles of revictimization.

What is hashing and matching?

Hashing is Safer Match’s foundational technology. Safer Match uses perceptual and cryptographic hashing to convert a file into a unique string of numbers. This is called a hash value. It’s like a digital fingerprint for each piece of content.

Hashes are compared against Safer’s hash list that contains 82.3M+ hash values of previously reported and verified CSAM. The system looks for a match of the hash without ever seeing users’ content.
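To make the idea concrete, here is a minimal Python sketch of hashing and matching. It is an illustration only, not Safer Match's actual implementation: the function names, the `max_distance` threshold, and the assumption that a perceptual hash arrives as a precomputed integer are all hypothetical. It shows the two kinds of comparison described above: an exact lookup of a cryptographic hash (SHA-256 here) and a near-match check of a perceptual hash by counting differing bits.

```python
import hashlib
from typing import Iterable, Set


def sha256_hex(data: bytes) -> str:
    """Cryptographic hash: identical bytes always produce the same digest."""
    return hashlib.sha256(data).hexdigest()


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two perceptual hashes."""
    return bin(a ^ b).count("1")


def is_match(
    data: bytes,
    perceptual_hash: int,
    known_exact: Set[str],
    known_perceptual: Iterable[int],
    max_distance: int = 4,  # hypothetical threshold, chosen for illustration
) -> bool:
    """Return True if the file matches the hash list exactly or nearly."""
    # Exact match: only the digest is compared against the list.
    if sha256_hex(data) in known_exact:
        return True
    # Near match: a perceptual hash within a few bits of a known hash
    # suggests a resized or re-encoded copy of the same content.
    return any(
        hamming_distance(perceptual_hash, known) <= max_distance
        for known in known_perceptual
    )
```

In this sketch the matching step only ever handles hash values, which is why the comparison can run without anyone viewing the underlying file. A production system would use an indexed lookup rather than a linear scan over perceptual hashes.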

If a match is found, the file is queued for your team to report to authorized entities, which refer it to law enforcement in the proper jurisdiction. Safer offers a Reporting API to send reports directly to NCMEC or the Royal Canadian Mounted Police (RCMP).

Only technology can tackle the scale of this issue

Millions of CSAM files are shared online every year. A large portion of these files are previously reported and verified CSAM that has already been added to an NGO hash list. Hashing and matching is a programmatic way to disrupt the spread of child sexual abuse material.

Additionally, investigators and Trust and Safety teams can spend less time reviewing repeat content. This frees them up to prioritize high-risk content, where a child may be suffering active abuse. Learn more about how our CSAM classifier helps find new CSAM.

By using this privacy-forward technology to constrain CSAM at scale, we can protect individual privacy and advance the fight against CSAM.

Safer is helping our customers protect their platforms

A solution built for proactive known CSAM detection, Safer Match uses hashing and matching as the core part of its detection technology. With the largest database of verified CSAM hash values (82+ million hashes) to match against, Safer Match can cast a wide net to detect known CSAM.

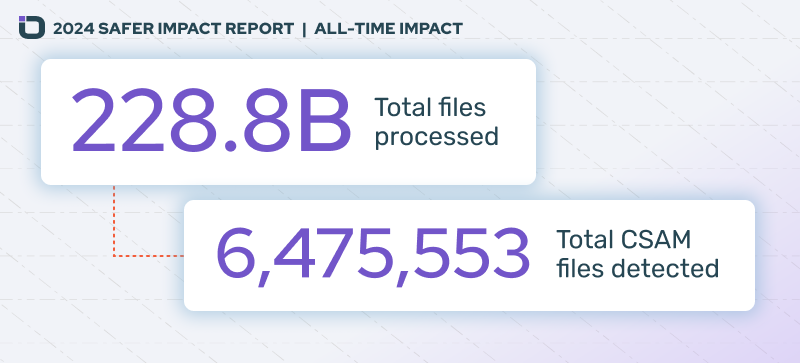

In 2024, we processed more than 112.3 billion images and videos for our customers. That empowered our customers to find 4,162,256 files of potential CSAM on their platforms. To date, Safer has matched more than 6.4 million files of potential CSAM.

Hashing and matching is crucial to protecting your users and your platform from the risks of hosting child sexual abuse content. The more platforms that use this technology, the closer we will get to our goal of eliminating CSAM from the internet.