Post By: Safer / 4 min read

There has been a 329% increase in child sexual abuse material (CSAM) files reported to the CyberTipline of the National Center for Missing and Exploited Children (NCMEC) over the last five years. In 2022 alone, NCMEC received more than 88.3 million CSAM files.

Several factors may be contributing to the increase in reports:

- More platforms are deploying tools, such as Safer, that detect known CSAM using hashing and matching. This is why we believe a rise in reports is not necessarily a bad thing.

- Online predators are becoming more brazen and are deploying novel technologies, such as chatbots, to scale their enticement. From 2021 to 2022, NCMEC saw an 82% increase in reports of online enticement of children for sexual acts.

- Self-generated CSAM (SG-CSAM) is on the rise. From 2021 to 2022 alone, the Internet Watch Foundation noted a 9% rise in SG-CSAM.

Addressing this issue requires scalable tools to detect both known and unknown CSAM.

Hashing and matching is the core of CSAM detection

Hashing and matching is the foundation of CSAM detection. But it’s only a starting point, because it identifies only known CSAM by matching files against hashlists of previously reported and verified content. This is why the size of your CSAM hash database is critical.

Safer offers the largest database of known CSAM hashes: more than 32 million and growing. Safer also enables customers to share hashlists with one another, further expanding the corpus of known CSAM and casting a wider net for detection.
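To make hashing and matching concrete, here is a minimal sketch. It is not Safer’s implementation: it assumes exact cryptographic hashes (SHA-256 from Python’s standard `hashlib`), and the function names are hypothetical. Production systems typically also use perceptual hashes, which can match visually similar files after resizing or re-encoding; an exact hash like this one cannot.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def merge_hashlists(*hashlists: set[str]) -> set[str]:
    """Hypothetical helper: union hashlists shared by several platforms.

    Returns a new set, so the contributed hashlists are not modified.
    """
    merged: set[str] = set()
    for hashlist in hashlists:
        merged |= hashlist
    return merged


def is_known(data: bytes, known_hashes: set[str]) -> bool:
    """True if the file's digest appears in a hashlist of verified content.

    Exact match only: changing a single byte yields a different digest,
    so this sketch cannot catch altered copies of known files.
    """
    return sha256_hex(data) in known_hashes
```

The merge step shows why sharing hashlists widens coverage: a file is caught if its hash appears in any contributor’s list, and a set lookup keeps each check fast as the list grows.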

You may be asking: how do you find new or previously unknown CSAM?

That’s where machine learning classification models come into play. Safer’s CSAM Classifier detects both new CSAM and previously unknown CSAM, meaning material that already existed but had not yet been classified as CSAM.

What is a classifier exactly?

Classifiers are algorithms that use machine learning to sort data into categories automatically.

How does Safer’s CSAM Classifier work?

Safer’s CSAM Classifier scans a file and assigns it a score that indicates the likelihood that the file contains a child sexual abuse image or video.

Customers set the classifier score threshold at or above which potential CSAM is flagged for human review. When a moderator reviews a flagged file and confirms whether or not it is CSAM, that decision feeds back to the classifier. This continual feedback loop helps it get even better at detecting novel CSAM.
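The score, threshold, and review workflow described above can be sketched as follows. This is a minimal illustration, not Safer’s implementation: `ScoredFile`, `ReviewQueue`, the 0.0 to 1.0 score range, and storing moderator decisions in a dictionary are all hypothetical, and the retraining step itself is not shown.

```python
from dataclasses import dataclass, field


@dataclass
class ScoredFile:
    file_id: str
    score: float  # hypothetical classifier output: likelihood in [0.0, 1.0]


@dataclass
class ReviewQueue:
    threshold: float  # customer-chosen cutoff; lower values flag more files
    labels: dict[str, bool] = field(default_factory=dict)

    def flag(self, files: list[ScoredFile]) -> list[ScoredFile]:
        """Return files scoring at or above the threshold, highest score first."""
        flagged = [f for f in files if f.score >= self.threshold]
        return sorted(flagged, key=lambda f: f.score, reverse=True)

    def record_decision(self, file_id: str, is_csam: bool) -> None:
        """Store a moderator's confirmation as a label for later retraining."""
        self.labels[file_id] = is_csam
```

Sorting flagged files by score puts the likeliest matches in front of moderators first. The threshold is a trade-off: lowering it catches more potential CSAM but sends more files to human review.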

Safer, our all-in-one solution for CSAM detection, combines advanced AI technology with a self-hosted deployment to detect, review, and report CSAM at scale. In 2022, Safer’s CSAM Classifier made a significant impact for our customers, classifying 304,466 images and 15,238 videos as potential CSAM.

How does this technology help your trust and safety team?

Finding new and unknown CSAM often depends on user reports, which typically join a growing backlog of content awaiting human review. Beyond finding novel CSAM in newly uploaded content, trust and safety teams can use a classifier in other ways, such as scanning a backlog or historical files.

Using this technology can help keep your content moderators focused on the high-priority content that poses the greatest risk to your platform and users. To put it in perspective, you would need a team of hundreds of people with limitless hours to achieve what a CSAM Classifier can do through automation.

Flickr Case Study

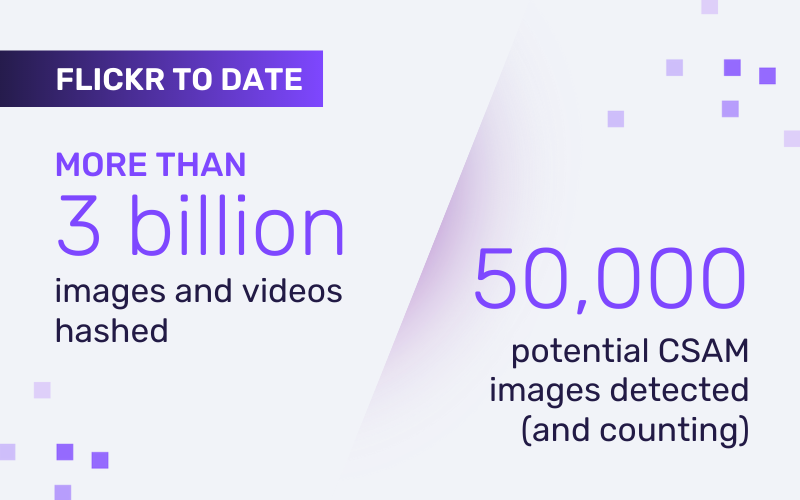

The image and video hosting site Flickr uses Safer’s CSAM Classifier to find novel CSAM on its platform. Millions of photos and videos are uploaded to Flickr each day, and the company’s Trust and Safety team has prioritized identifying new and previously unknown CSAM. The inherent challenge is that hashing and matching detects only known CSAM, so finding new material required artificial intelligence. That’s where the CSAM Classifier comes in.

As Flickr’s Trust and Safety Manager, Jace Pomales, summarized it, “We don’t have a million bodies to throw at this problem, so having the right tooling is really important to us.”

One recent classifier hit led to the discovery of 2,000 previously unknown images of CSAM. After the material was reported to NCMEC, law enforcement conducted an investigation, and a child was rescued from active abuse. That’s the power of this life-changing technology.

A Coordinated Approach is Key

To eliminate CSAM from the internet, we believe a focused and coordinated approach must be taken. Content-hosting platforms are key partners, and we’re committed to empowering the tech industry with tools and resources to combat child sexual abuse at scale. This is about safeguarding our children. It’s also about protecting your platform and your users. With the right tools, we can build a safer internet together.