Helping companies fight the spread of child sexual abuse material

Online sexual harms against children are on the rise and evolving. Deepfakes and large-scale financial sextortion schemes now feel commonplace. At the core of these harms is the documentation of child sexual abuse, referred to as child sexual abuse material (CSAM).

CSAM was previously distributed through the mail. By the ’90s, investigative strategies had nearly eliminated the trade of CSAM.

And then, the internet happened.

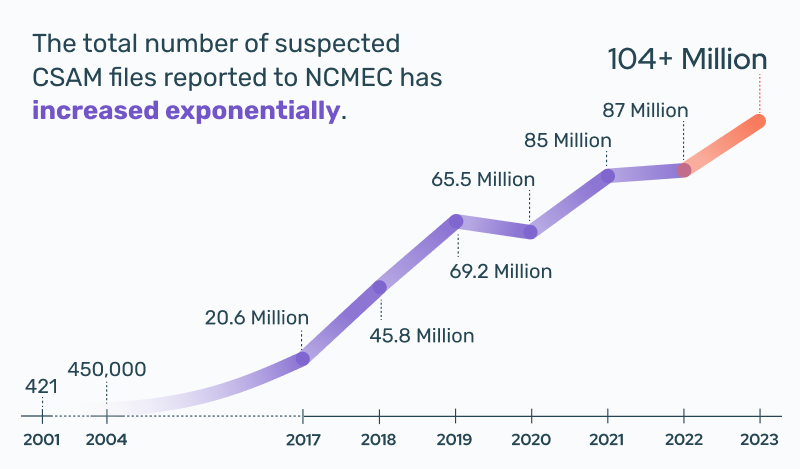

In 2004, the National Center for Missing and Exploited Children (NCMEC) received 450,000 files of suspected CSAM. In 2023, this number exceeded 104 million.

As the number of reports of child sexual abuse material found on the internet continues to increase, it raises the question:

Because technology is contributing to its proliferation, what is technology’s role in mitigating the creation and distribution of CSAM?

“Eliminating child abuse content from the internet is only possible if every company - small, medium and large - is equipped to find it, report it to NCMEC and remove it. Our solution is purpose-built to detect online sexual harms against children, giving digital platforms the tools they need to take action.”

— Julie Cordua, Thorn CEO

Thorn is committed to providing digital platforms with resources and solutions for understanding and mitigating the risks associated with CSAM. This 7-part series is an essential guide to understanding CSAM and the detection strategies used by trust and safety professionals.

CSAM Detection Strategies

The methods of detecting CSAM have evolved over the years and leverage a variety of technologies, from hash matching to classifiers. In this series, we explore the scale of the issue, the risks to digital platforms, and the solutions available to mitigate the risks of hosting CSAM.

What is CSAM? Common terms and definitions

Understand key terms and phrases.

Essential guide explaining CSAM terminology, detection technologies, and key organizations working to protect children online. Foundational knowledge for trust and safety professionals.

Online child sexual abuse and exploitation statistics

Understand the scale of the issue.

Disturbing statistics reveal how digital technology has accelerated child sexual abuse and exploitation. Learn critical trends in CSAM, online grooming, and emerging AI-based threats.

Know the signs and risks of hosting CSAM

Four questions to help assess your platform’s risk.

Learn common risk indicators that can help you assess your platform’s risk of hosting CSAM and why a proactive approach to detection is crucial for trust and safety.

Hash matching methods for CSAM detection

Understand the use case for available hashing methods.

Explore the multiple hashing methodologies used in CSAM detection. Perceptual and cryptographic hashes each serve different purposes.

CSAM Classifiers: Specialized detection methods

Understand the need for specialized CSAM detection.

Explore the complexities of CSAM detection, why multiple detection methods are needed, and how Thorn's expertise informs AI-based solutions.

Scene-sensitive video hashing for CSAM video detection

Understand how to detect CSAM within video content.

Discover Scene-Sensitive Video Hashing (SSVH) technology and how it can be incorporated into your existing workflows to help detect CSAM within videos.

CSAM hash sharing across digital platforms

Learn about the available CSAM hash lists.

Learn about verified CSAM hash list sources from NCMEC, IWF, and Google, plus how Safer enables cross-platform hash sharing among its customers.

How to use this series

If you're new to trust and safety, this series is a great introduction to CSAM, from defining the various terms to understanding the technologies used to detect this content. Even if you’re a seasoned pro, you may find the details about detection methodologies or recent research on the issue helpful. We've tried to make this series as concise and easy to understand as possible, while also ensuring there’s something valuable for all audiences.

You can use the above table of contents to jump to the information that interests you most, or you can start at the beginning with: What is CSAM? Common terms and definitions.