Access to comprehensive and verified hash lists is crucial for trust and safety teams seeking to detect known CSAM on their platforms. This article explores the major sources of verified CSAM hash lists available to platforms today, including those from NCMEC, IWF, and Google, as well as Thorn's innovative approach to hash list aggregation and cross-platform sharing. We'll also discuss how Safer's self-managed hash lists can help close critical detection gaps and strengthen your platform's trust and safety operations.

Available verified CSAM hash lists

NCMEC

After an image or video is reported to NCMEC as containing CSAM, analysts review and confirm it at least three times before its hash value is added to a hash list. In 2023, NCMEC engaged Concentrix to conduct an independent audit of its hash lists. The audit found that 99.99% of the images and videos reviewed were verified as CSAM (that is, they met the U.S. federal legal definition of child pornography under 18 U.S.C. § 2256(8)).

IWF

The IWF hash list is updated daily and contains hash values that have been manually verified by analysts as CSAM. At least two people assess each child sexual abuse image or video independently before it’s added to the hash list. The hash list contains the following hash types: PhotoDNA, MD5, SHA-1 and SHA-256. Access to this hash list requires IWF membership.
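As a rough illustration of the three cryptographic hash types named above, the sketch below computes MD5, SHA-1, and SHA-256 digests with Python's standard `hashlib` module. PhotoDNA is a proprietary perceptual hash and is not shown. The helper name `cryptographic_digests` is hypothetical and is not part of any IWF tooling.

```python
import hashlib


def cryptographic_digests(data: bytes) -> dict:
    """Return hex digests for the three cryptographic hash types listed above."""
    return {
        "md5": hashlib.md5(data).hexdigest(),
        "sha1": hashlib.sha1(data).hexdigest(),
        "sha256": hashlib.sha256(data).hexdigest(),
    }


digests = cryptographic_digests(b"example file bytes")
# Digest lengths in hex characters: MD5 = 32, SHA-1 = 40, SHA-256 = 64.
print({name: len(value) for name, value in digests.items()})
```

Cryptographic digests like these match only byte-identical files. Changing a single byte produces a different digest.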

Google

Google offers an Industry Hash Sharing platform, which enables select companies to share their CSAM hashes with each other. Google is the largest contributor to this platform with approximately 74% of the total number of hashes on the list.

Aggregating hash lists and enabling cross-platform CSAM hash sharing

Detection of known CSAM grows more effective with each new hash value you can match against. To that end, Safer by Thorn has developed a CSAM hash database that aggregates available hash lists. It contains more than 76 million verified CSAM hash values. Cross-platform sharing of novel CSAM hashes also helps diminish the viral spread of CSAM, so Safer gives customers the option to share hashes across platforms.
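Conceptually, aggregation can be pictured as a union of hash lists that records which sources contributed each value. The sketch below is a minimal illustration under that assumption. The source names, sample values, and the `aggregate` helper are placeholders, not Safer's implementation or data format.

```python
from collections import defaultdict


def aggregate(lists: dict) -> dict:
    """Merge several hash lists into one mapping of hash -> contributing sources."""
    merged = defaultdict(set)
    for source, hashes in lists.items():
        for value in hashes:
            # Normalize case so the same digest from two sources deduplicates.
            merged[value.lower()].add(source)
    return dict(merged)


# Placeholder values standing in for real hash strings.
sources = {
    "list_a": {"AAA111", "bbb222"},
    "list_b": {"aaa111", "ccc333"},
}
database = aggregate(sources)
print(len(database))               # 3 unique hashes
print(sorted(database["aaa111"]))  # ['list_a', 'list_b']
```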

Safer’s self-managed hash lists and cross-platform sharing options

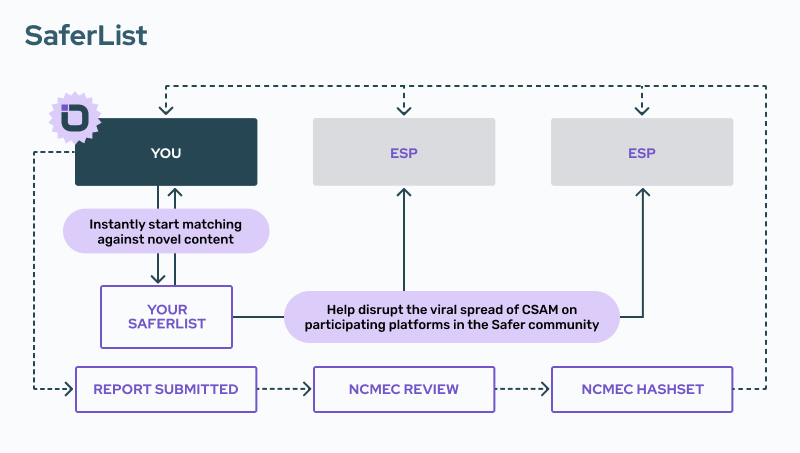

In addition to our matching service, which contains millions of hashes of verified child sexual abuse material (CSAM), Safer also provides self-managed hash lists that each customer can utilize to build internal hash lists (and even opt to share these lists with the Safer community). When you join Safer, we create a group of self-managed hash lists called SaferList.

Right from the start, your queries are set to match against these lists. All you have to do is add hashes to your lists. (Pro tip: If you’re using the Review Tool, it’s a simple button click to add hashes to SaferList.)

Each customer will have access to self-managed hash lists to address these content types:

- CSAM - Hashes of content your team has verified as novel CSAM.

- Sexually exploitative (SE) content - Content that may not meet the legal definition of CSAM but is nonetheless sexually exploitative of children.

There are unique benefits to using each of these lists. Additionally, every Safer customer can choose to share their list with the Safer community (either openly or anonymously).

Guard against re-uploads with your CSAM SaferList

With the established CSAM reporting process, there is a delay between when you submit novel CSAM to NCMEC (or other reporting entities) and when you begin to match against that content. That gap makes it possible for previously verified CSAM to be re-uploaded to your platform undetected. SaferList can help combat this.

When you report CSAM to NCMEC, the content goes through an important but lengthy review process to confirm that it is indeed CSAM. Only after that process is complete does NCMEC add the hash to its list of known CSAM. Until then, programmatic hash matching won’t flag that content if it is uploaded again. In other words, your platform is vulnerable to that content until you can match against it. SaferList helps patch that vulnerability.
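The gap described above can be pictured with a toy matcher that checks a verified list first and a self-managed list second. This is a sketch under stated assumptions: the `Matcher` class, its field names, and the placeholder hash strings are hypothetical and do not represent Safer's API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Matcher:
    """Hypothetical matcher that checks a verified list and a self-managed list."""
    verified: set = field(default_factory=set)      # e.g. aggregated verified hashes
    self_managed: set = field(default_factory=set)  # hashes your own team has added

    def match(self, content_hash: str) -> Optional[str]:
        """Return the name of the list that matched, or None if the hash is unknown."""
        if content_hash in self.verified:
            return "verified"
        if content_hash in self.self_managed:
            return "self_managed"
        return None


matcher = Matcher(verified={"hash-known"})
novel = "hash-novel"  # placeholder for content your team just verified and reported

print(matcher.match(novel))      # None: not yet on any verified list
matcher.self_managed.add(novel)  # add to your own list at report time
print(matcher.match(novel))      # self_managed: a re-upload is now flagged
```

Adding the hash to the self-managed list at report time means a re-upload is caught immediately, without waiting for the external review to finish.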

Use your SE SaferList to support enforcement of your platform’s policies

Different platforms will have different policies about sexually exploitative (SE) content. Your platform will likely define within your policies what constitutes SE content and how your platform intends to enforce policies related to that content.

Generally speaking, SE content is content that may not meet the legal definition of CSAM but is nonetheless sexually exploitative of children. Although not CSAM, the content may be associated with a known series of CSAM or otherwise used in a nefarious manner. This content should not be reported to NCMEC, but you will likely want to remove it from your platform.

Once you have identified SE content, you can add its hashes to your SE SaferList. Matching against these hashes helps you detect other instances of the content and enforce your community guidelines.
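Because the two list types call for different handling, one way to picture enforcement is a small policy table keyed by list type. The table below is purely illustrative: the action names, the `ListType` enum, and the `enforcement_actions` helper are assumptions for this sketch, and each platform would substitute its own policies.

```python
from enum import Enum


class ListType(Enum):
    CSAM = "csam"
    SE = "se"


# Illustrative policy table only; each platform defines its own enforcement.
POLICY = {
    ListType.CSAM: ["remove", "report_to_ncmec"],
    ListType.SE: ["remove"],  # SE content is not reported to NCMEC
}


def enforcement_actions(content_hash: str, lists: dict) -> list:
    """Return the actions for the first self-managed list that contains the hash."""
    for list_type in (ListType.CSAM, ListType.SE):
        if content_hash in lists.get(list_type, set()):
            return POLICY[list_type]
    return []


my_lists = {ListType.CSAM: {"hash-1"}, ListType.SE: {"hash-2"}}
print(enforcement_actions("hash-2", my_lists))  # ['remove']
print(enforcement_actions("hash-9", my_lists))  # []
```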

The power of community

To eliminate CSAM from the web, we believe a focused and coordinated approach will be most effective. That’s why we created SaferList—so that platforms can share CSAM hash values. Every Safer customer can choose to share their list with the Safer community (either openly or anonymously).

You can also choose to match against SaferLists shared by other Safer users. The more platforms that use and share their SaferLists, the faster we break down data silos and the quicker we diminish the viral spread of CSAM on the open web.
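Matching against lists shared by other platforms can be sketched as a lookup across subscribed lists. The example assumes that anonymous sharing hides the contributor's name from other users. That interpretation, along with the `SharedList` type and `match_shared` helper, is a guess made for illustration rather than a description of how Safer works.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class SharedList:
    """Hypothetical record for a list shared with the community."""
    owner: str
    hashes: frozenset
    anonymous: bool = False

    def attribution(self) -> str:
        # Assumption: anonymous sharing hides the contributor's name from others.
        return "anonymous" if self.anonymous else self.owner


def match_shared(content_hash: str, subscribed: Iterable[SharedList]) -> Optional[str]:
    """Return the attribution of the first shared list containing the hash."""
    for shared in subscribed:
        if content_hash in shared.hashes:
            return shared.attribution()
    return None


subscribed = [
    SharedList("platform_a", frozenset({"hash-1"})),
    SharedList("platform_b", frozenset({"hash-2"}), anonymous=True),
]
print(match_shared("hash-1", subscribed))  # platform_a
print(match_shared("hash-2", subscribed))  # anonymous
print(match_shared("hash-3", subscribed))  # None
```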
