Maximize efficiency and portability while maintaining data security

Safer is a secure and flexible suite of tools designed to support your company’s processes and scale your efforts to detect child sexual abuse material (CSAM) and text-based child sexual exploitation (CSE). Our modular platform consists of a series of services delivered to you through a self-hosted deployment.

With Safer running within your infrastructure, you can keep your data secure and maintain user privacy while accessing the tools you need to safely and efficiently handle CSAM and text-based CSE detection.

You control deployment

Safer’s services run on your infrastructure. You select which services you need and control how they integrate with your existing systems and workflows.

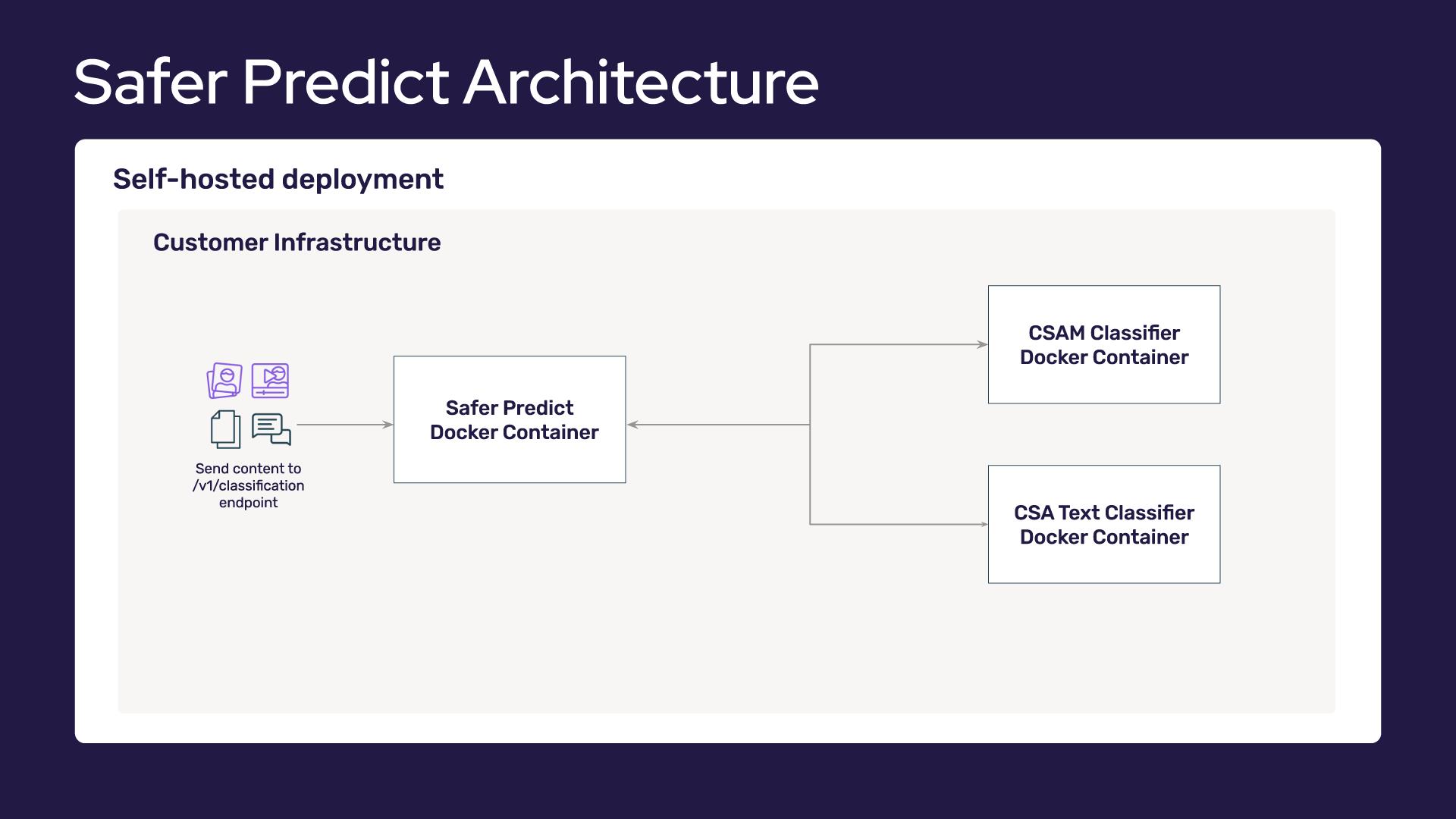

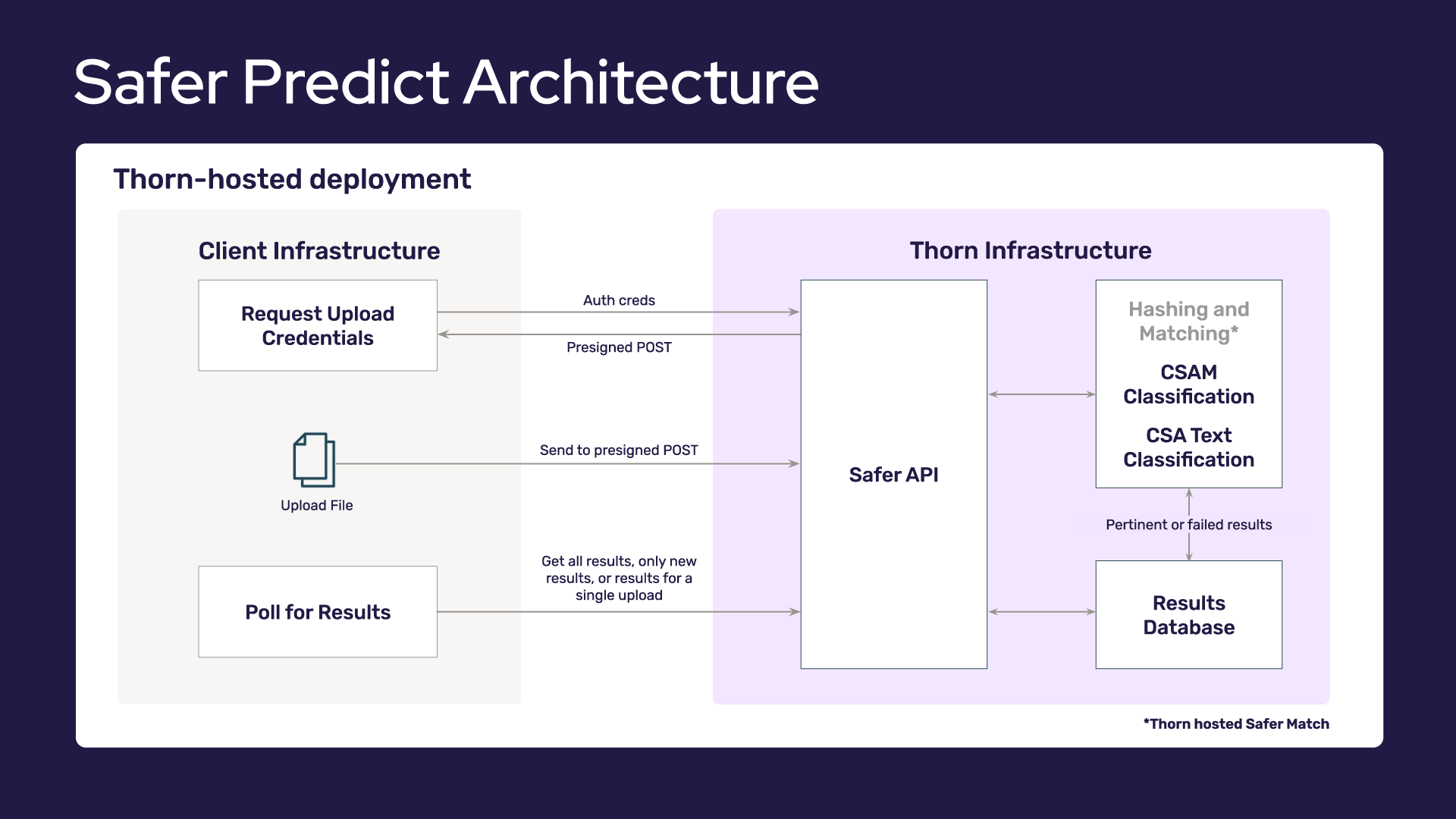

The diagram below illustrates Safer’s architecture and where it sits within your infrastructure.

The primary components of Safer are:

- Safer Match: Hashing Services

- Safer Predict: Classifiers powered by predictive AI and machine learning models

- Review Tool

- Reporting Service

With the exception of the Matching Service, which compares hashes against known CSAM hashes, all services run within your infrastructure.

The flexibility to scale

Safer is modular and built to be flexible. Since the services are deployed as separate modules, it’s easy to mix and match different services and integrate them into your existing systems and workflows.

You have the flexibility to add services as your platform and needs change. Only need Hashing and Matching services? Or do you need an end-to-end solution? Safer can be tailored to your CSAM and text-based child sexual exploitation detection needs and grow with you as you scale.
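To make the mix-and-match idea concrete, here is a minimal sketch of how a team might record which modules it has selected and where each one runs. The `Service` enum, `describe_deployment` function, and preset names are hypothetical illustrations, not part of Safer's actual configuration. The only rule encoded is the one stated above: the Matching Service is the one service that does not run in your infrastructure.

```python
from enum import Enum


class Service(Enum):
    """Hypothetical labels for the Safer modules described above."""
    HASHING = "hashing"
    MATCHING = "matching"
    CLASSIFIERS = "classifiers"
    REVIEW_TOOL = "review_tool"
    REPORTING = "reporting"


# Per the description above, Matching is the only service not run in your infrastructure.
REMOTE_SERVICES = frozenset({Service.MATCHING})


def describe_deployment(selected: set[Service]) -> dict[str, list[str]]:
    """Split a selection of modules into self-hosted and remote groups."""
    local = sorted(s.value for s in selected if s not in REMOTE_SERVICES)
    remote = sorted(s.value for s in selected if s in REMOTE_SERVICES)
    return {"self_hosted": local, "remote": remote}


# Two hypothetical presets: a minimal hash-and-match setup and an end-to-end setup.
HASH_AND_MATCH = {Service.HASHING, Service.MATCHING}
END_TO_END = set(Service)
```

Because each module is just a member of a set, growing from `HASH_AND_MATCH` to `END_TO_END` means adding entries rather than redesigning the integration. That mirrors the modular deployment described above.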

Keep your data secure

With Safer, all file processing occurs entirely within your system. User content is hashed and classified within your environment, and only the hashes of image and video files leave it. These hashes are sent to the Matching Service via API, where they are compared against hashes of known CSAM. Because the hashes cannot be converted back into the original files, neither the content nor the user is exposed during matching.
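The hash-then-match flow can be sketched as follows, assuming a plain SHA-256 digest as a stand-in for Safer's actual hashing. Perceptual hashing, which matches visually similar files rather than identical bytes, is not shown. `StubMatchingService` is a hypothetical local stand-in for the remote Matching Service API. The point of the sketch is that the file bytes stay inside `hash_file_bytes`, and only the hex digest is handed to the matcher.

```python
import hashlib
from dataclasses import dataclass


def hash_file_bytes(data: bytes) -> str:
    """Compute a SHA-256 digest locally; the raw bytes are not returned or sent anywhere."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class MatchRequest:
    """Hypothetical payload for a remote matching call: it carries only the hash."""
    sha256: str


class StubMatchingService:
    """Hypothetical stand-in for a remote matching API that holds known hashes."""

    def __init__(self, known_hashes: set[str]) -> None:
        self._known = frozenset(known_hashes)

    def match(self, request: MatchRequest) -> bool:
        # The service sees only the digest, never the original file.
        return request.sha256 in self._known


def check_upload(data: bytes, service: StubMatchingService) -> bool:
    """Hash content inside your environment, then send only the hash for matching."""
    request = MatchRequest(sha256=hash_file_bytes(data))
    return service.match(request)
```

A SHA-256 digest is a one-way function of the input, so the matcher can confirm a match against a known hash without being able to recover the file from it.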

All content stays in your system until it is time to report. Content verified as CSAM by your Trust and Safety team leaves your platform only when you send reports to the National Center for Missing and Exploited Children (NCMEC) or the Royal Canadian Mounted Police (RCMP).
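The rule above, that content leaves the platform only after human verification and only as part of a report, can be expressed as a simple gate. The statuses and function names below are hypothetical and do not reflect the Safer Review Tool's or Reporting Service's actual data model. They only illustrate that unreviewed or cleared items never enter the outbound report queue.

```python
from dataclasses import dataclass
from enum import Enum


class ReviewStatus(Enum):
    """Hypothetical review outcomes recorded by your Trust and Safety team."""
    PENDING = "pending"
    VERIFIED_CSAM = "verified_csam"
    NOT_CSAM = "not_csam"


@dataclass
class FlaggedItem:
    content_id: str
    status: ReviewStatus = ReviewStatus.PENDING


def can_leave_platform(item: FlaggedItem) -> bool:
    # Only content a reviewer has verified as CSAM is eligible to be reported.
    return item.status is ReviewStatus.VERIFIED_CSAM


def build_report_queue(items: list[FlaggedItem]) -> list[str]:
    """Return IDs cleared for a report to NCMEC or RCMP; everything else stays local."""
    return [item.content_id for item in items if can_leave_platform(item)]
```

Keeping the check in one function makes the boundary easy to audit: any code path that sends content off-platform has to pass through `can_leave_platform`.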

Protect your platform and your users

Because Safer's services are self-hosted, you can select the services you need to help protect your platform and your users from abusive content. Safer is deployed within your infrastructure, giving you control over how it integrates with your current tech stack. With Safer, you can detect, review, and report CSAM while keeping your data secure and maintaining user privacy.