In 2019, Thorn CEO Julie Cordua delivered a TED talk about eliminating child sexual abuse material (CSAM) from the internet. In that talk, she explained how hash sharing will be a critical tool in helping us achieve that goal. A hash is a digital fingerprint of a file. For the purposes of Thorn’s mission, these fingerprints can be used to detect whether an image matches a known CSAM image, without having to distribute the CSAM image itself. Cryptographic hashes (e.g., MD5, SHA-1) can be used to detect whether two files are exactly the same. Meanwhile, perceptual hashes (e.g., PhotoDNA) can be used to detect whether two files are similar. Today, lists of millions of cryptographic and perceptual hashes are available to help detect CSAM images quickly.

Despite all the advancements in technology that help tech companies identify abuse images faster, the work to eliminate CSAM is far from over. 2019 was the first year in which technology companies reported more videos than images to the NCMEC CyberTipline, an indication that the rising popularity of video among internet users has carried over to the distribution of child abuse content.

According to Limelight Networks’ State of Online Video 2019 report, internet users spent a weekly average of six hours and 48 minutes watching online videos, a staggering 59% increase from 2016.

It's clear that video isn't going anywhere.

When this content spreads, it results in the re-victimization of child sexual abuse survivors and victims. Video also poses an additional layer of complexity: when a video starts spreading on the internet, content reviewers are often in a position of having to re-watch the same videos (which take longer to review than images) over and over again.

While cryptographic hashing can be used to find and de-duplicate these videos, there have been very few perceptual hashing options to identify similar videos. Relying on cryptographic hashes alone makes it hard to identify CSAM accurately, because any change to a file, like the addition of metadata, will result in a completely different cryptographic hash, even if the files are otherwise identical.
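To make the difference concrete, here is a minimal sketch in Python. The "file" is a hypothetical 4x4 grayscale frame, and `average_hash` is a toy perceptual hash chosen purely for illustration; it is not PhotoDNA and not the algorithm described in this post.

```python
import hashlib

def md5_hex(data):
    """Cryptographic hash of the raw file bytes."""
    return hashlib.md5(data).hexdigest()

def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set when the pixel is above the mean."""
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits

def hamming(a, b):
    """Number of differing bits between two integer hashes."""
    return bin(a ^ b).count("1")

# Hypothetical 4x4 grayscale frame, flattened row by row.
frame = [10, 200, 30, 220, 15, 210, 25, 230,
         12, 205, 28, 225, 18, 215, 22, 235]

# Same pixels, but the file gained some trailing metadata bytes.
original_file = bytes(frame)
edited_file = original_file + b"\x00comment"

# Same content, slightly brightened (a visually minor change).
brighter = [min(255, p + 10) for p in frame]

md5_changed = md5_hex(original_file) != md5_hex(edited_file)
perceptual_distance = hamming(average_hash(frame), average_hash(brighter))

print("MD5 changed after adding metadata:", md5_changed)
print("Perceptual hash distance after brightening:", perceptual_distance)
```

The cryptographic hash changes as soon as a single byte is appended, while the toy perceptual hash of the decoded pixels stays within a small Hamming distance under a mild visual change.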

At Thorn, we spent part of last year designing, testing, and deploying algorithms to reliably detect CSAM at scale in order to stop re-victimization and help mitigate continued exposure to traumatic content for content reviewers. Since then, we’ve deployed these algorithms within the tools we build for users and, based on their effectiveness, believe they are now ready for broader use.

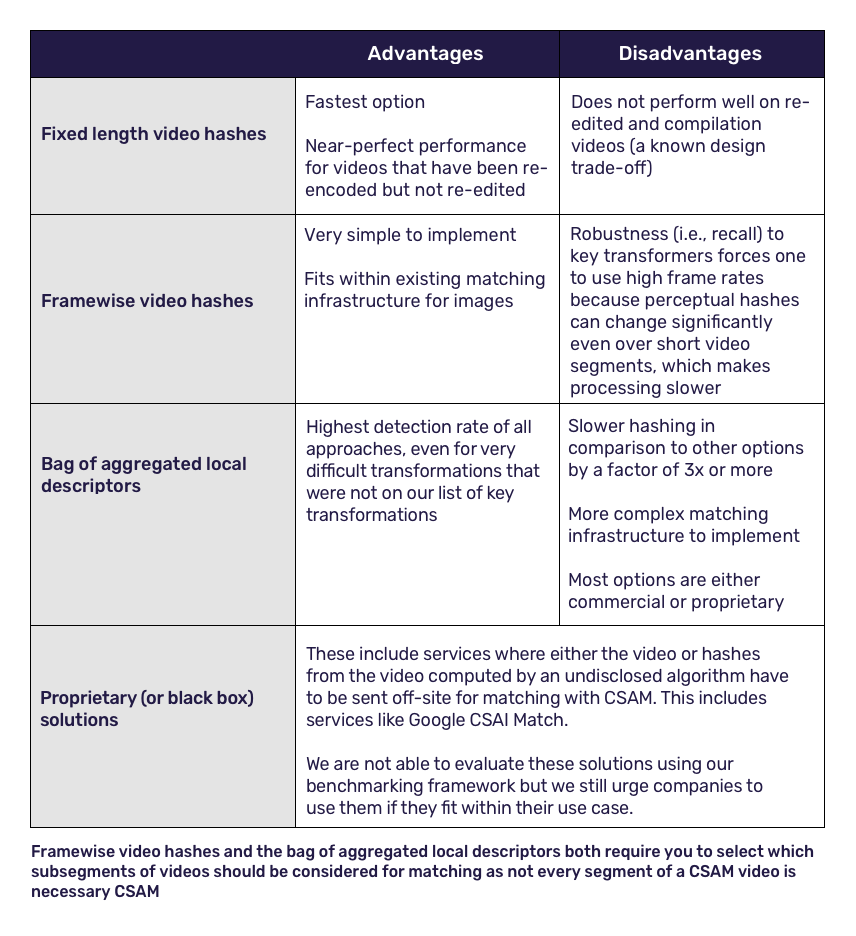

As a non-profit that also builds technology, we understand the technical challenges of effective perceptual hashing for video. One of them is the sheer number of ways to approach it, including:

- Fixed length video hashes using global frame descriptors (e.g., TMK)

- Framewise video hashes (e.g., computing image hashes from videos at some regular interval)

- Bag of aggregated local descriptors (e.g., Videntifier)

- Proprietary solutions (e.g., Google CSAI Match)

In this blog post, we share our thought process for evaluating the various methods, with the aim of opening a dialogue in the child safety community about how to tackle this problem and building a community around growing our capabilities together.

Identifying our requirements

For each of the above, there are a number of adaptations one could make depending on the needs of a particular use case. At Thorn, we started with identifying high-level design criteria informed by our overarching goal: to eliminate CSAM from the internet. Those criteria were then translated to specific technical and functional requirements, as follows:

Requirement #1: Find as much CSAM as possible

- Ensure the perceptual hashing algorithm is robust to common transformations of CSAM videos

- Avoid disclosure of detection technology to offenders

Requirement #2: Remove or minimize barriers to adoption

- Minimize latency, subject to the constraints above. We set a soft target to hash 60 seconds of video in less than 1 second for 480p video

- Fit within existing hashing and matching infrastructure

- Maintain privacy by allowing fully on-premise use, with source code available to authorized users

- Freely available

Introducing Scene-Sensitive Video Hashing (SSVH)

With these requirements in mind, we built a benchmarking system that would allow us to easily compare different algorithms. Our approach is generally to measure recall (i.e., the fraction of videos matched correctly) at different precision levels (e.g., 99.99% or 99.9%). In other words, we check how many true matches we can find if we only allow 0.01% or 0.1% of the matches to be incorrect. We use different levels because some use cases can tolerate higher error rates than others.
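As a rough illustration of the metric (not our actual benchmarking code), the sketch below computes recall at a minimum precision from a list of scored candidate pairs. The function name, the data layout, and the example numbers are all assumptions made for this example.

```python
def recall_at_precision(scored_pairs, total_true, min_precision):
    """Best recall achievable while keeping precision >= min_precision.

    scored_pairs: list of (distance, is_true_match); lower distance = more similar.
    total_true:   number of true matches that exist in the benchmark.
    """
    pairs = sorted(scored_pairs, key=lambda p: p[0])
    best_recall = 0.0
    true_pos = false_pos = 0
    i = 0
    while i < len(pairs):
        # Accept every pair at this distance (ties share one threshold).
        threshold = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1]:
                true_pos += 1
            else:
                false_pos += 1
            i += 1
        precision = true_pos / (true_pos + false_pos)
        if precision >= min_precision:
            best_recall = max(best_recall, true_pos / total_true)
    return best_recall

# Made-up benchmark: four true matches exist, two candidates are wrong.
pairs = [(0.05, True), (0.10, True), (0.12, True),
         (0.20, False), (0.25, True), (0.40, False)]

strict = recall_at_precision(pairs, total_true=4, min_precision=0.999)
relaxed = recall_at_precision(pairs, total_true=4, min_precision=0.75)
print(strict, relaxed)
```

In this toy data, the strict setting stops before the first incorrect match and finds three of the four true matches, while the relaxed setting tolerates one error and finds all four.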

We evaluated the existing options on both technical and functional requirements and found the following advantages and disadvantages:

So we defined our technical and functional requirements, analyzed the different methods, and learned that each method has its own advantages and disadvantages. So how do you decide which one to use? Sometimes, it’s not just one.

Because we were interested in a limited number of transformations while needing to be very fast, we opted for a compromise between fixed length video hashes (which aggregate global frame descriptors across a video) and framewise video hashes (which keep them apart) by splitting long videos into much shorter segments (or scenes). This allowed us to leverage the speed of the fixed length hash while retaining the ability to detect sub-segments of videos that might be compiled or edited. We call this approach Scene-Sensitive Video Hashing, or SSVH.
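The general idea can be sketched as follows. This is a simplified illustration, not the SSVH implementation: it assumes frame descriptors have already been computed, cuts scenes at a fixed number of frames, aggregates each scene by averaging, and compares hashes with Euclidean distance. All function names and values are hypothetical.

```python
import math

def split_into_scenes(descriptors, frames_per_scene):
    """Cut the list of per-frame descriptors into consecutive fixed-size scenes."""
    return [descriptors[i:i + frames_per_scene]
            for i in range(0, len(descriptors), frames_per_scene)]

def scene_hash(scene):
    """Aggregate one scene into a fixed-length vector (element-wise mean)."""
    n = len(scene)
    return [sum(column) / n for column in zip(*scene)]

def hash_video(descriptors, frames_per_scene=2):
    """One fixed-length hash per scene, instead of one per video or one per frame."""
    return [scene_hash(s) for s in split_into_scenes(descriptors, frames_per_scene)]

def matching_scenes(query_hashes, known_hashes, max_distance):
    """Return (query_scene_index, known_scene_index) pairs that are close enough."""
    matches = []
    for qi, q in enumerate(query_hashes):
        for ki, k in enumerate(known_hashes):
            if math.dist(q, k) <= max_distance:
                matches.append((qi, ki))
    return matches

# Hypothetical 2-dimensional frame descriptors for a known video (two scenes).
known_video = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]

# An edited compilation: new material first, then the second scene of the known video.
compilation = [[0.5, 0.5], [0.5, 0.5], [0.0, 1.0], [0.0, 1.0]]

known_hashes = hash_video(known_video)
query_hashes = hash_video(compilation)
matches = matching_scenes(query_hashes, known_hashes, max_distance=0.1)
print(matches)
```

A single whole-video hash would blend the new material with the reused scene. Hashing per scene lets the reused segment match on its own, while still producing far fewer hashes than a framewise approach.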

Selecting scenes for matching

This doesn’t yet address the question of how we separate non-CSAM segments of videos from CSAM. For over a year, Thorn has been working with partners in the child safety ecosystem to build a machine learning model for detecting CSAM in images. The model classifies images into three categories: “CSAM,” “pornography,” and “other.” To select scenes that will be retained for hashing, we classify the first frame in each scene to obtain an estimate of whether it is CSAM. If the estimate is very low, we drop the hash for that scene from the hash list. Otherwise, we retain the scene for matching.
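The selection step described above can be sketched like this. The classifier is passed in as a plain callable and stubbed out in the example; the score format, the `min_score` value, and the scene data layout are assumptions for illustration, not the real model interface.

```python
def select_scene_hashes(scenes, classify_frame, min_score=0.05):
    """Keep the hash of every scene whose first frame is not clearly irrelevant.

    scenes:         list of dicts with "frames" (list) and "hash" keys.
    classify_frame: callable returning a dict of scores per category.
    min_score:      hypothetical cutoff below which a scene is dropped.
    """
    kept = []
    for scene in scenes:
        scores = classify_frame(scene["frames"][0])  # first frame only
        if scores["CSAM"] >= min_score:
            kept.append(scene["hash"])
    return kept

# Stub classifier with made-up scores, keyed by placeholder frame ids.
FAKE_SCORES = {
    "frame-a": {"CSAM": 0.01, "pornography": 0.04, "other": 0.95},
    "frame-c": {"CSAM": 0.60, "pornography": 0.30, "other": 0.10},
}

def stub_classifier(frame):
    return FAKE_SCORES[frame]

scenes = [
    {"frames": ["frame-a", "frame-b"], "hash": "hash-1"},
    {"frames": ["frame-c", "frame-d"], "hash": "hash-2"},
]

hash_list = select_scene_hashes(scenes, stub_classifier)
print(hash_list)
```

Only the first frame of each scene is classified, which keeps the cost of building the hash list low, and the cutoff is deliberately permissive so that a scene is dropped only when the estimate is very low.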

We will talk more about our efforts to build this classifier in a future blog post. For now, it’s not necessary to do video detection because the classifications are only relevant when building the hash list to match against.

SSVH Performance

In bench tests, SSVH achieves greater than 95% recall at 99.9% precision for all of the transformations on our target list. When deployed to production in Safer, our industry solution that helps content-hosting platforms quickly identify and act on CSAM, we have had no reported false positives (so far!). In addition, we’ve found that partners that start carrying out perceptual hashing for video end up getting their first flagged file within hours to days.

“We recently implemented the SSVH video hashing algorithm and have been consistently impressed with the technologies created by Thorn. It has been immensely rewarding for us to watch the Safer service flag a video after install and enable our Trust & Safety team to act quickly with this new found intelligence. Thorn has proven itself to be a very reliable and trustworthy partner in the fight to make our platform safer.”

Alex Seville, Director of Engineering at Flickr

So far, less than 5% of the matches would have been found by MD5 matching alone.

We’ve also deployed SSVH to help partners de-duplicate their content review workflow to avoid re-exposure to identical content. In one case, this led to a 20% reduction in the review workload.
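As a simplified picture of how hash-based de-duplication can shrink a review queue, the sketch below groups items whose hashes are close to one already queued for review. The greedy strategy, the distance threshold, and the sample data are assumptions for illustration and do not describe a partner's actual workflow.

```python
import math

def deduplicate(queue, max_distance):
    """Greedy de-duplication of a review queue.

    queue: list of (item_id, hash_vector) in arrival order.
    Returns (ids_to_review, duplicates) where duplicates maps a skipped
    item id to the id of the representative it matched.
    """
    representatives = []  # (item_id, hash_vector) pairs that need review
    duplicates = {}
    for item_id, vector in queue:
        for rep_id, rep_vector in representatives:
            if math.dist(vector, rep_vector) <= max_distance:
                duplicates[item_id] = rep_id
                break
        else:
            # No close representative found: this item needs its own review.
            representatives.append((item_id, vector))
    return [rep_id for rep_id, _ in representatives], duplicates

# Made-up queue: item "c" is a near-copy of item "a".
queue = [
    ("a", [0.0, 1.0]),
    ("b", [1.0, 0.0]),
    ("c", [0.01, 0.99]),
    ("d", [0.5, 0.5]),
]

to_review, duplicates = deduplicate(queue, max_distance=0.05)
reduction = 1 - len(to_review) / len(queue)
print(to_review, duplicates, reduction)
```

Items flagged as duplicates can inherit the decision made for their representative, so reviewers see each piece of content only once.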

Sharing with the broader ecosystem

One of Thorn’s core objectives is to equip the tech industry with the tools to quickly identify and remove CSAM from their platforms. By proactively eliminating opportunities for bad actors to distribute child sexual abuse material, platforms are protecting their community of users, their employees, and the victims whose abuse is depicted in the content.

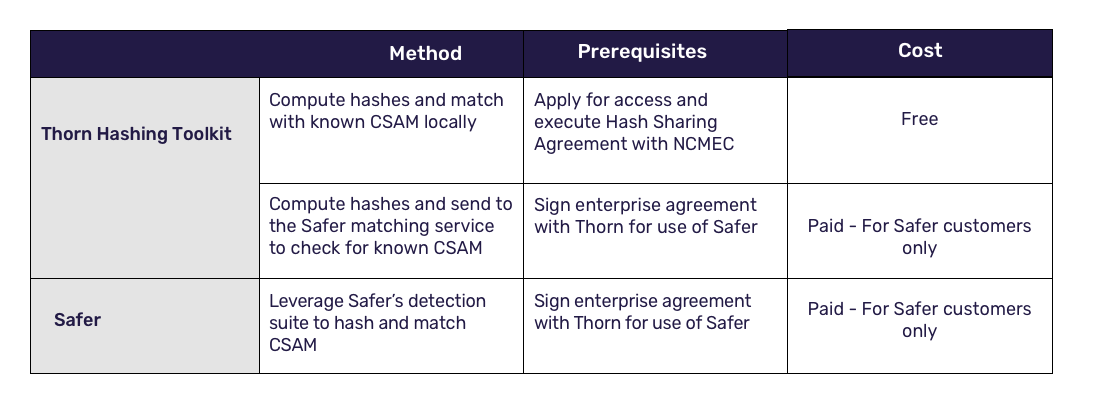

Thorn is making SSVH available for authorized users to further support platforms in the fight against CSAM. Companies can use SSVH to detect CSAM in one of the following ways:

The options to leverage Safer are available to Safer customers, who help us sustain our work for the longer term. However, the locally installed option for using the Thorn Hashing Toolkit is free for approved companies. Our aim is to provide tools such that every organization and company working to defend children from sexual abuse has what it needs to be successful. We are providing this technology openly in the interest of collecting feedback and improving our shared practices together, until every child can just be a kid.

Fausto Morales works on computer vision projects at Thorn. Before Thorn, he worked on computer vision projects in the industrial sector including pipeline monitoring in the oil and gas industry, quality assurance in product packaging plants, and industrial diagram interpretation. He enjoys working on open source projects to make computer vision accessible to more people.