Post By: Safer / 4 min read

Thorn launched Safer in 2019 to empower content-hosting platforms to create safer spaces online by proactively detecting child sexual abuse material (CSAM) on their platforms. In 2023, more companies than ever deployed Safer. This shared dedication to child safety is extraordinary and showcases what’s possible when we work together to build a safer internet.

Though Safer customers span a range of industries, they all host user-generated content and have a desire to provide safe experiences to their users. Safer enables platforms to take a proactive approach to detecting CSAM at scale. Trust and safety teams rely on our comprehensive hash database and predictive artificial intelligence to help them find CSAM amid the millions of pieces of content uploaded to their platforms every day.

Safer’s 2023 feature updates

In 2023, Safer added a Thorn-hosted hashing and matching solution; a new account portal for customers that offers self-service admin features and on-demand insights; and enhanced capabilities in the review module that expand reporting details.

Thorn-hosted deployment: At Thorn, we understand that trust and safety teams are short on resources. That's why we launched a Thorn-hosted hashing and matching solution this year that enables platforms to take the first step in proactive CSAM detection with minimal engineering resources. With this solution, you can quickly begin to protect your platform and users from harmful sexual abuse content and the risks of hosting CSAM.

Customer Admin Portal: We launched an admin portal to unlock the possibility of self-service Safer features. Last year, we enabled customers to manage their hash lists, contact support, and share their CSAM hash lists via SaferList to increase cross-platform intelligence and diminish the viral spread of CSAM. Customers can now also view their impact metrics from the past 12 months. The portal will continue to be the hub for all questions, data, and controls. Stay tuned for more updates.

Review Module: In Q4, we launched two highly requested enhancements in the review module: international addresses in reports and more robust filtering and search capabilities. The ability to include the Reported User's international address helps moderators add detail to the reports they submit to the National Center for Missing and Exploited Children and the Royal Canadian Mounted Police. Enhanced filtering, pagination, and search in the reports list section will help improve content moderators' workflows when managing their report queues.

Safer’s 2023 impact

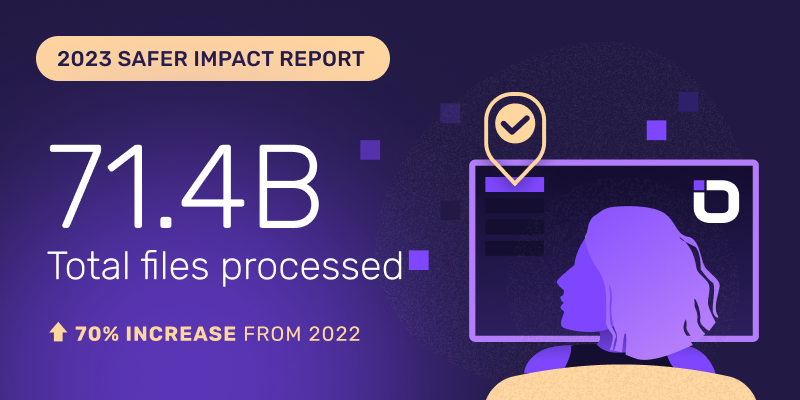

More files were processed through Safer in each of the last three months of 2023 than in 2019 and 2020 combined. These inspiring numbers indicate a shift in priorities for many companies as they dial up their efforts to center the safety of children and their users.

In 2023, Safer processed 71.4 billion files input by our customers. This massive 70% increase over 2022 was propelled in part by the addition of 10 new Safer customers. Today, 50 platforms, with millions of users and vast amounts of content, comprise the Safer community, creating a significant and ever-growing force against CSAM online.

As our community grows, so too does the database of hashes available to Safer customers. With 57+ million hashes currently, Safer casts a wide net to detect CSAM. Our SaferList lets customers contribute hash lists, which means it’s always growing. Giving our customers a means to share hash values helps mitigate re-reviews of the same content and empowers platforms to help stop the viral spread of CSAM.

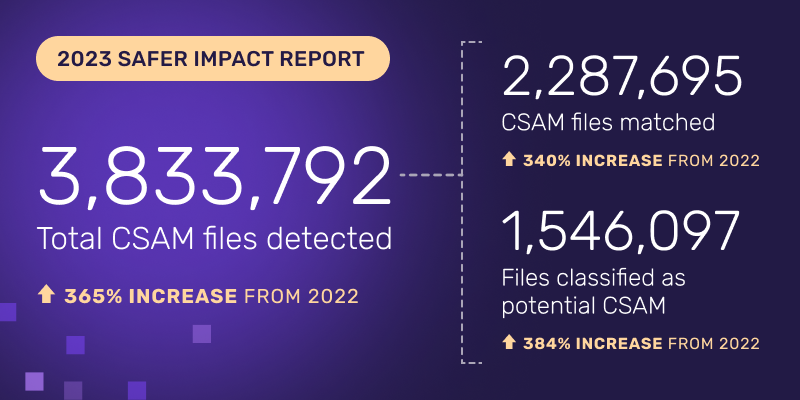

Our customers detected more than 2,000,000 images and videos of known CSAM in 2023. This means Safer matched the files’ hashes to verified hash values from trusted sources, identifying them as CSAM. A hash is like a digital fingerprint of a file. Comparing hashes allows Safer to programmatically determine whether a file is previously verified CSAM, while avoiding unnecessary exposure of content moderators to harmful content.
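To make the fingerprint idea concrete, here is a minimal sketch of hash matching in Python. It is an illustration only, not Safer's implementation: it assumes an exact-match SHA-256 digest from the standard library, and the function names and the placeholder hash list are hypothetical. Production systems may use other hash types, which this sketch does not cover.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the file bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_known_match(data: bytes, verified_hashes: set[str]) -> bool:
    """Return True if the file's hash appears in the verified hash list."""
    return sha256_hex(data) in verified_hashes


# Hypothetical verified hash list, built here from placeholder bytes.
verified_hashes = {sha256_hex(b"placeholder-known-file")}

print(is_known_match(b"placeholder-known-file", verified_hashes))  # True
print(is_known_match(b"some-other-upload", verified_hashes))       # False
```

Because only the hashes are compared, the check runs without anyone having to open or view the file itself.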

In addition to detecting known CSAM, our predictive AI detected more than 1,500,000 files of potential unknown CSAM. Safer’s image and video classifiers use machine learning to predict whether new content is likely to be CSAM and flag it for further review.
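The "predict, then flag for review" step can be pictured as a simple score threshold. The sketch below is an assumption-laden illustration, not Safer's classifier: the `Prediction` type, the `flag_for_review` function, and the 0.8 cutoff are all hypothetical, and the scores are made-up placeholders standing in for a model's output.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.8  # hypothetical cutoff; a real value would be tuned per platform


@dataclass
class Prediction:
    file_id: str
    score: float  # classifier's estimated likelihood, from 0.0 to 1.0


def flag_for_review(predictions, threshold=REVIEW_THRESHOLD):
    """Return IDs of files whose score meets the threshold, highest score first."""
    flagged = [p for p in predictions if p.score >= threshold]
    flagged.sort(key=lambda p: p.score, reverse=True)
    return [p.file_id for p in flagged]


# Placeholder predictions for three uploads.
predictions = [Prediction("a", 0.95), Prediction("b", 0.10), Prediction("c", 0.82)]
print(flag_for_review(predictions))  # ['a', 'c']
```

Sorting by score is one possible design choice: it lets a review queue surface the most likely matches first, while the final decision stays with a human moderator.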

Altogether, Safer detected more than 3,800,000 files of known or potential CSAM, a 365% increase in just a year, showing both the accelerating scale of the issue and the power of a unified fight against it.

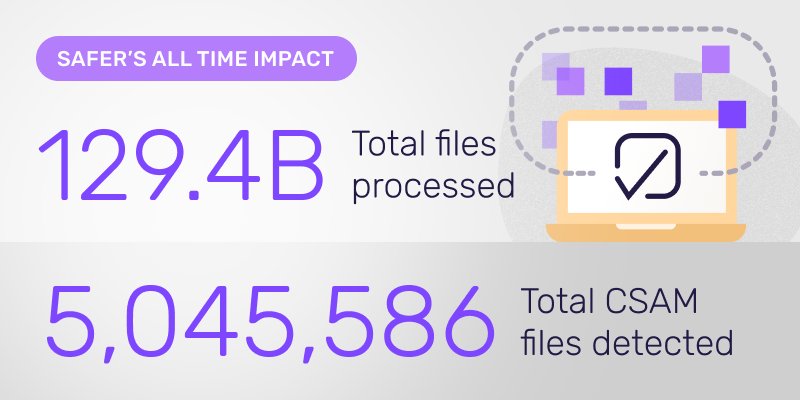

Last year continued to highlight the profound impact of a coordinated approach to eliminating CSAM online. Since 2019, Safer has processed 129.4 billion files from content-hosting platforms, and of those, detected 5 million CSAM files. Each year that we remain focused on this mission helps shape a safer internet for children, platforms, and their users.

Join our mission

Combating the spread of CSAM requires a united front. Unfortunately, efforts across the tech industry remain inconsistent and many tools rely on siloed data. Safer is here to help create change at scale.

Today, the internet is safer because more companies than ever chose to be proactive, using industry-leading solutions, forward-thinking policies, and well-equipped teams to prioritize child safety on their platforms.