Post by: Safer

Today, children are on every digital platform — and so too are those looking to do them harm.

The motivations of these bad actors can differ, but they all target community platforms where they know children spend their time. (1) After all, platform features — designed for the very purpose of fostering connections — make it easier than ever to interact with children.

At the same time, young people are far more comfortable these days with online-only friendships and relationships — making them especially vulnerable to those with ulterior motives.

This easy access to unsuspecting children has given rise to new types of child sexual perpetrators.

For trust and safety teams, understanding who may be lurking on your platform, what motivates them, and how they operate helps you identify suspicious behavior and put mitigations in place to create a safer platform. Here, we look at some examples of the most prominent child sexual perpetrators identified in Thorn’s research.

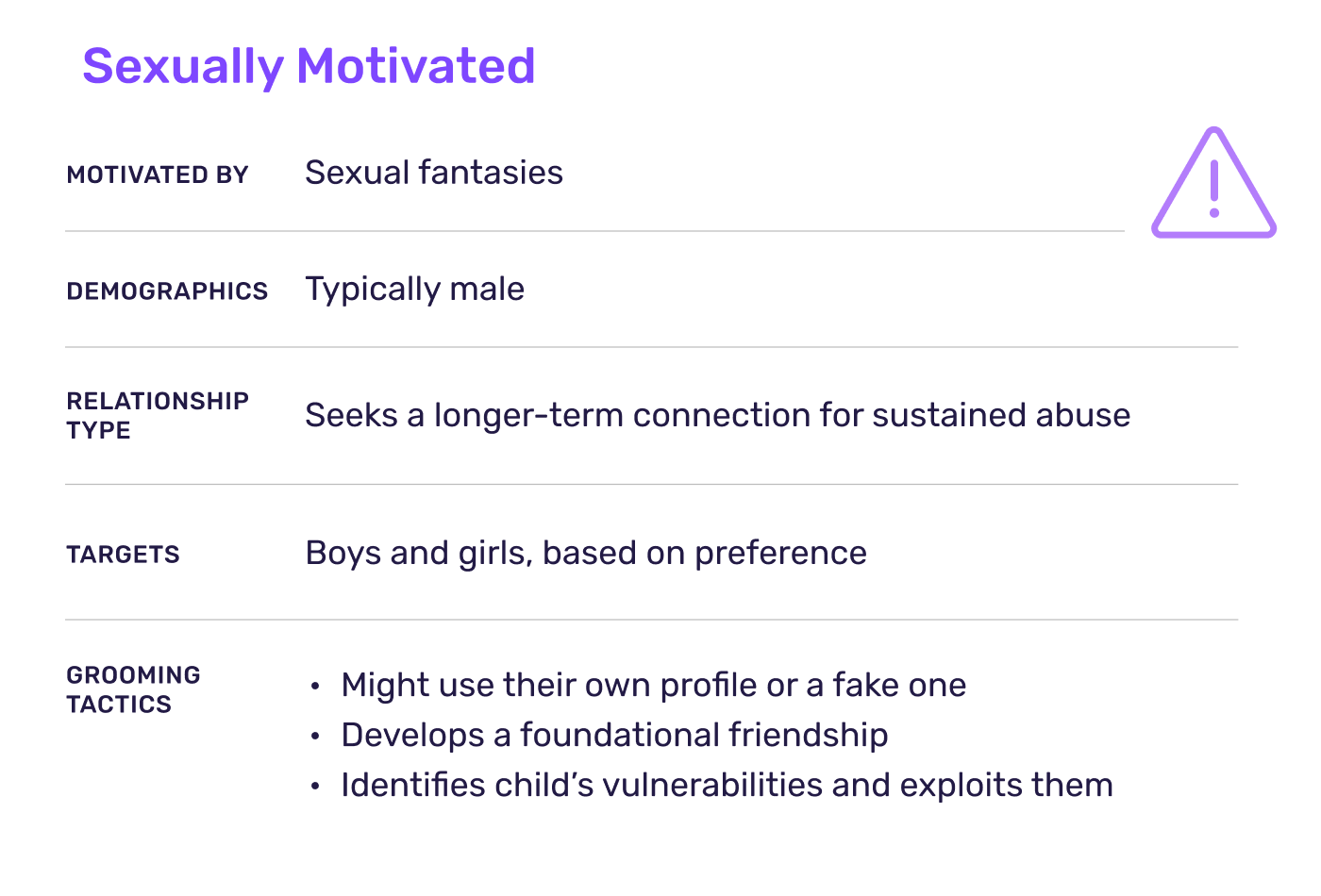

Sexually Motivated Bad Actor

When we think of adults targeting children online, we usually envision the predator who’s sexually motivated. Indeed, these perpetrators predate the internet, and in the digital age, some now use it to find new victims and fellow offenders.

These actors are typically men who are motivated by personal sexual fantasies. They join platforms with the goal of finding victims and/or obtaining child sexual abuse material (CSAM). Targeting young girls or boys based on their preference, they often seek a longer-term relationship with the child for sustained abuse.

Through their own profile or a fake one, these actors engage with the child and develop a foundational friendship. They’ll express shared interests, ask about the child’s life, and check in often, building the child’s trust and affection over time. Eventually, often through flattery, they coerce the child into sending sexual content, typically produced by the child themselves (known as self-generated CSAM). Through manipulation, such as social and emotional guidance or pressure, they ensure the child stays in the abusive relationship.

Some offenders start by befriending parents, particularly single mothers, in an attempt to gain access to their young children or content of their children.

As they obtain CSAM, offenders often share it on content-hosting platforms, which revictimizes the child again and again. Entire online communities have built up around creating and trading CSAM. And as it becomes more widely available, repeated exposure may normalize behaviors that fuel this content’s creation, and can serve as a kind of on-ramp to more and more egregious material.

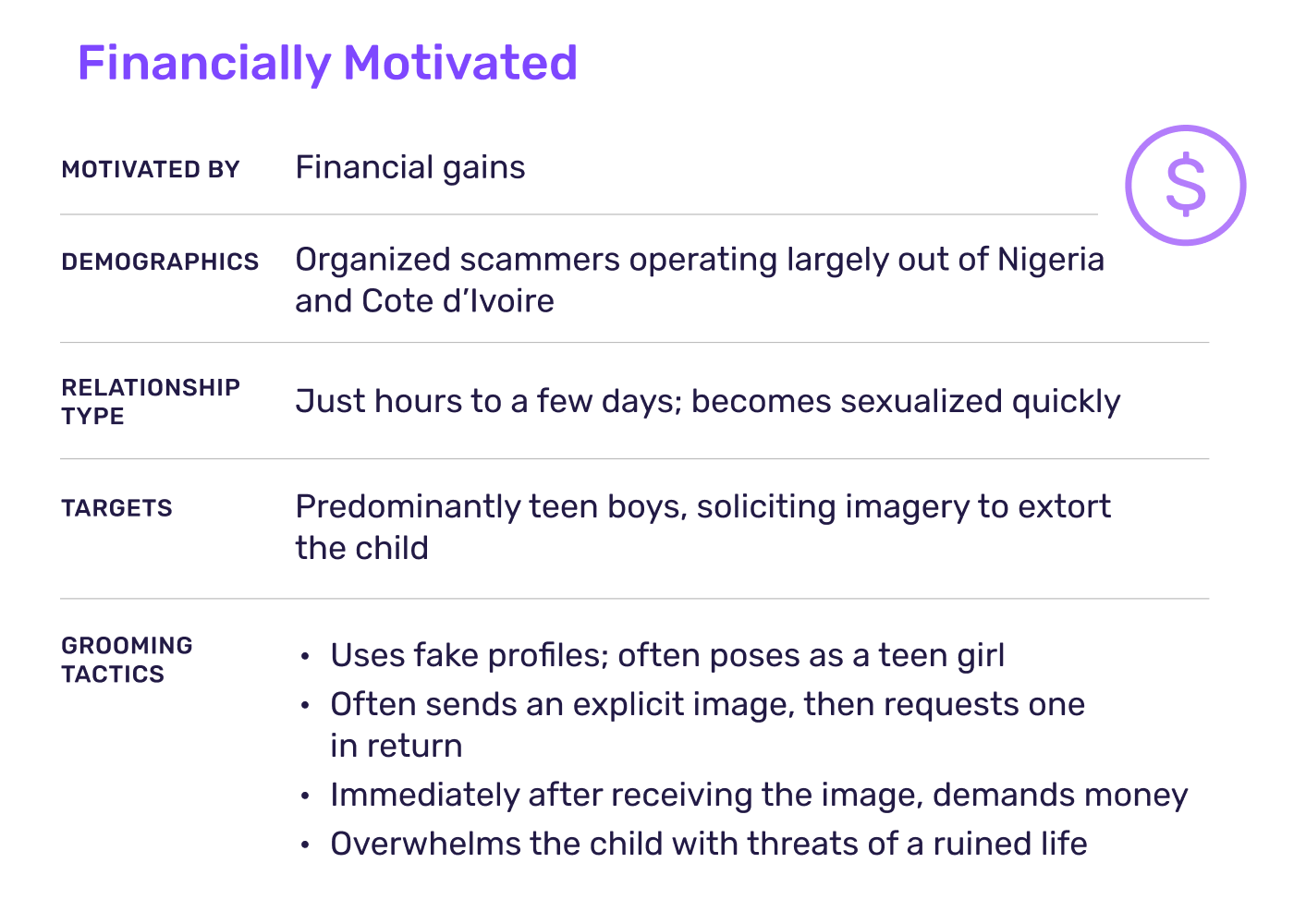

Financially Motivated Bad Actor

Financial sextortion — the act of extorting someone by threatening to expose their sexual imagery for monetary gain — is a disturbing scheme that’s rising at alarming rates.

The trend involves perpetrators posing as teenage girls and targeting boys aged 14-17 online. The scammers quickly build a deceptive relationship with the boy. Then, exploiting his emotional needs for connection and attraction, they’ll share intimate images with him and coerce him into reciprocating by sending sexual images of himself. (2) Once he does, they dramatically shift tone and threaten to expose his images if he doesn’t deliver financial payment. Terrorized with threats of a ruined life — such as “Once I send, you won't get into college, you'll be added to child sex offender list, your life is over” — the child is thrust into a traumatic experience of fear and shame.

The progression of threats is extremely rapid — often starting within hours of first contact — and designed to pressure the child to pay before he has time to process the threats or seek support. (3)

Typically part of organized crime groups operating predominantly out of Nigeria and Côte d’Ivoire, these scammers are known to act on their threats, exposing the images to friends and family. Sextortion has gained national attention for its severe and devastating consequences, including victim suicide.

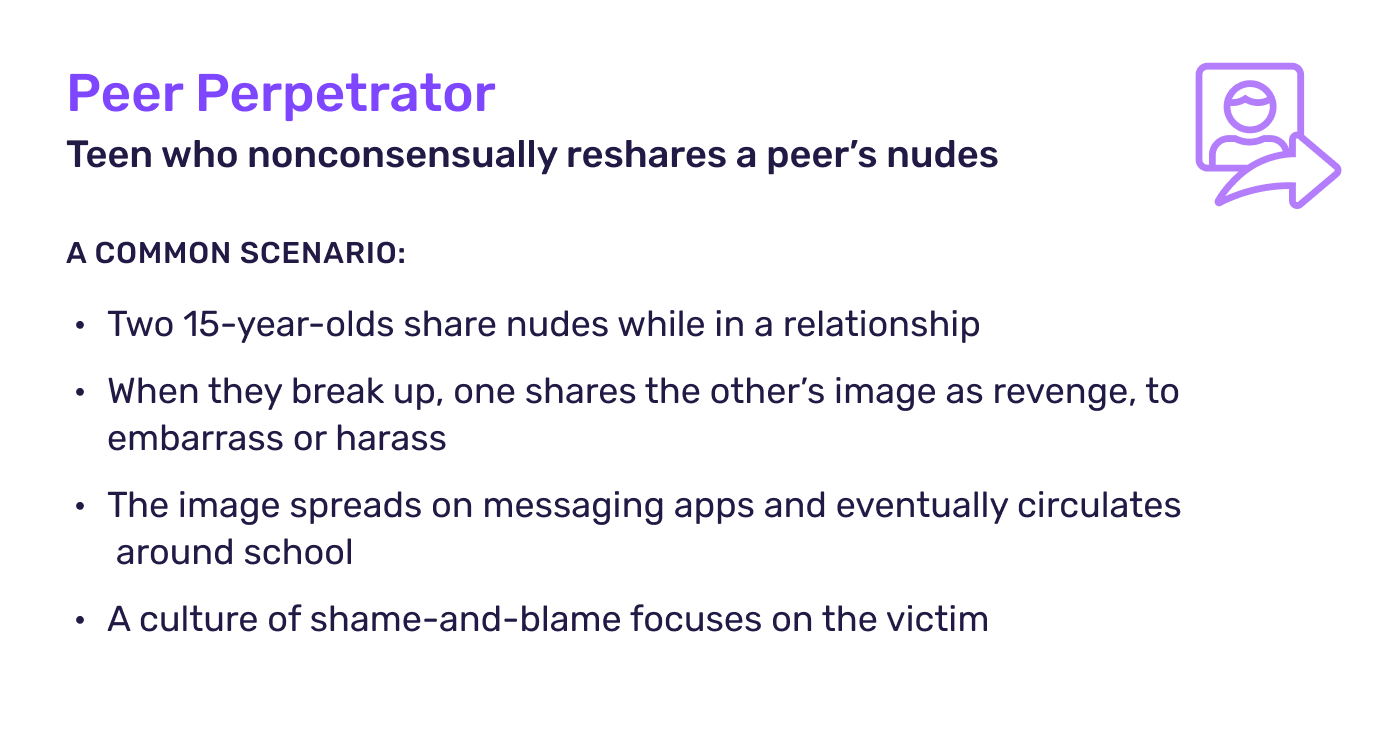

Peer Perpetrator

Unfortunately, it’s not just adults who pose a risk to children online. Today, the actions of other youth also threaten kids in digital spaces: when youth nonconsensually reshare peers’ nudes, they can cause severe harm, even if that harm is unintentional or not fully understood.

These scenarios often start in romantic relationships. For example, two 15-year-olds share nudes while they’re dating. When they break up, one reshares the other’s image without permission as revenge or to embarrass or harass. The explicit image spreads on messaging apps and eventually circulates around the school. Typically, a culture of victim-shaming, which has shaped both teen and parent-child relationships, places all the blame on the child whose nude was leaked.

Self-Harm Extremist Groups

Another class of child sexual perpetrators is also starting to emerge: international violent extremist groups. These networks, which have already targeted thousands of children, pursue and sextort youth with mental health issues. They coerce the child into sending a nude photo, and then use the image to blackmail the child into committing extreme acts on camera, such as horrific self-harm or cruelty to animals. The schemes serve as entertainment for members, who are driven to gain notoriety within their community. The groups predominantly convene on platforms with live streaming and group chats.

How Bad Actors Exploit Platform Features

As bad actors move through the process of searching out a victim, engaging the child, and eventually committing abuse, they exploit everyday platform features to do so. Here’s a look at common tactics used by perpetrators at each step along their path:

Searching out victims

Bad actors often search out victims on open platforms with large concentrations of users, especially those with open or public accounts.

Tactic:

Might use location-sharing features to find victims, for example by looking through public photos tagged at local establishments like schools or gyms, or at other places where they think children may be.

Meeting and engaging the child

Bad actors can disguise themselves through many of the profile features used on platforms, as well as exploit those features to broaden their search.

Tactics:

- May misrepresent themselves by creating fake personas and accounts to mislead the child about their identity and to appear more trustworthy.

- Likely uses direct messaging to establish and build rapport with the child.

- May use multiple fake accounts to engage with the child and gain their trust.

- Accesses and uses the child’s follower list or network to identify additional child targets.

Isolating the child

Once they’ve established a friendship, perpetrators often move, or “push,” the child to a platform with enhanced privacy features or features perceived to be more amenable to abuse, such as ephemeral messaging or encryption.

Tactic:

Suggests moving the conversation to a different platform because it’s “better” or “easier to chat.”

Abusing the child

Having built the child’s trust, the bad actor will coax or manipulate the child into abusive scenarios.

Tactic:

Uses content generation tools to share and receive images, videos, and audio of the abuse.

The proliferation of publicly available AI tools is only accelerating these tragic harms. Bad actors already employ everything from rapid text generation to AI image-generation models to advance grooming efforts, CSAM production, and sextortion threats, among other crimes.

So What Can Platforms Do?

The first step is acknowledging that, today, children and bad actors exist on every platform. This reality makes it critical to review your platform features through the lens of these users, their behaviors, and their motives. Trust and Safety teams should review their child safety policies and on-platform reporting tools to ensure they’re designed to truly safeguard children. Research shows kids rely heavily on these reporting tools to defend themselves.

Additionally, knowing what youth experience online can help you tailor your mitigations to serve their needs and properly protect them.

Together, we can build a safer internet and defend children from sexual abuse even as bad actors evolve in the ever-shifting digital world.

REFERENCES

(1) Suojellaan Lapsia, Protect Children ry. (2024). “Tech Platforms Used by Online Child Sexual Abuse Offenders: Research Report with Actionable Recommendations for the Tech Industry.”

(2) Federal Bureau of Investigation (FBI). “Financially Motivated Sextortion.” How We Can Help You. FBI website.

(3) Hoffman, C. (2023). “FBI Warns of Predators Targeting Kids on Social Media.” KDKA News.