As trust and safety teams evolve, they develop a set of signals and intelligence that help them identify potential risks and harmful behaviors on their platforms. They gather information about user accounts and content, and they adopt proactive detection technologies. These tools can be layered to help identify harmful behavioral patterns, potential policy violations, or areas of legal risk.

But what if your platform doesn’t have a well-established trust and safety team? How can you assess your risk of hosting CSAM?

Some available data can serve as indicators that your platform may be at risk. The criteria below do not represent an exhaustive list of CSAM risk indicators, and each platform’s level of risk and legal requirements will vary.

Start assessing your platform’s risk by considering the following questions:

1. Do you host user-generated content?

CSAM images and videos aren’t just a concern for social media, messaging, or file-sharing platforms. This material has been detected on many different types of sites, and the one thing they all have in common is an upload button. User-generated content takes many forms across the web and is hosted and shared in many different ways, and platforms often cannot control what content is uploaded to their servers or when users access it. As a result, a wide range of services are at risk, from newsgroups and bulletin boards to peer-to-peer networks, online gaming sites, and more.

According to data from INHOPE’s 2023 annual report, website and image hosting sites remained the dominant categories used by bad actors to share and distribute CSAM, but these are not the only types of sites where CSAM images and videos are found. Any platform that lets users connect directly with one another is a potential host for CSAM, particularly when those features can be exploited by bad actors.

2. Have you received user reports about CSAM on your platform?

With CSAM, a single instance on your platform is most likely not an isolated case but an indication of a larger issue. Relying on user reports alone is an unreliable approach to CSAM mitigation: your brand’s reputation and safety are too important to crowdsource their protection.

If data from reporting entities is any indication of the ratio between public reports and reports from electronic service providers (ESPs) and other proactive sources, it’s clear that a proactive, scalable approach to detection is needed to mitigate your risk of hosting CSAM.

In 2023:

NCMEC: 0.7% of CSAM reports submitted to the CyberTipline came from the public.

IWF: 34% of reports assessed were submitted via external sources (the public, law enforcement, etc.).

Think about what could happen between the moment CSAM is uploaded to your platform and the moment your team removes it. How long would that file be hosted and viewable by community members before it was discovered? Who was exposed to it but didn’t report it? Without proactive CSAM detection measures in place, your user experience and brand reputation are at risk.

3. Where is your domain hosted? Where are your physical servers located?

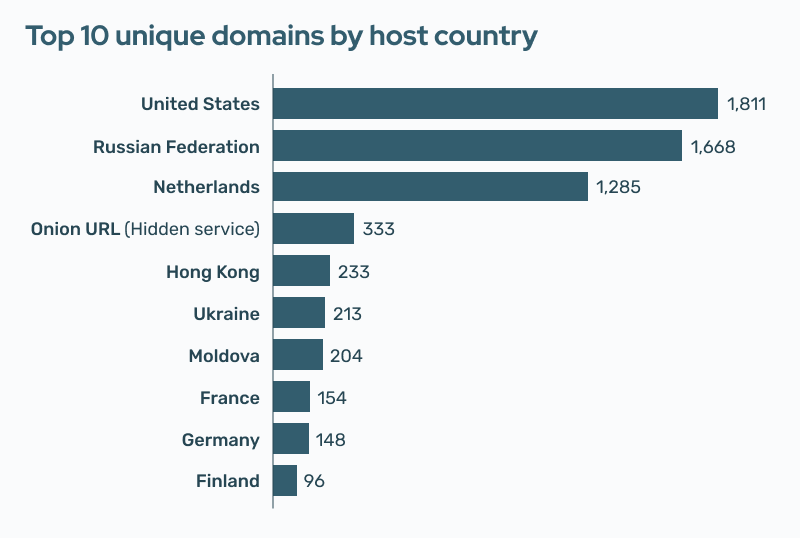

Geographic location can provide a general indication of your platform’s risk of hosting CSAM. According to the IWF’s 2023 annual report, 64% of reports were found on servers located in Europe (including Russia and Turkey). The IWF also reports that the United States (1,811), the Russian Federation (1,668), and the Netherlands (1,285) had the highest numbers of unique domains with actioned content.

The number of unique child sexual abuse websites hosted in a country can indicate the scale of the problem in that geography. If you host user-generated content (UGC) and your domain is hosted in one of the three countries mentioned above, you may be at greater risk of hosting CSAM.

4. Are you looking for scalable methods to enforce your policies?

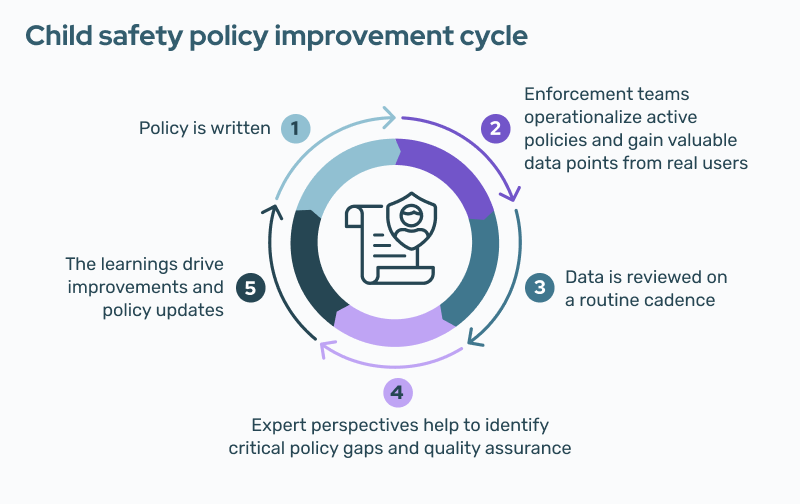

Trust and safety has emerged as a strategic differentiator for many companies. After defining your platform’s policies, you’ll need to put practices in place to enforce them and develop a process for continuous policy improvement that keeps pace with bad actors.

Each year, new social media and messaging apps pop up, along with new file sharing services and communities where CSAM can potentially take hold. Down the road, the differentiator between these startups and their established, market-leading competitors may be policy enforcement. Users and advertisers expect platforms to be safe and free of harmful content.

A Proactive Approach

Digital platforms are uniquely positioned to have a significant impact in the fight against CSAM. Trust and safety teams can remove this material from circulation before it does additional harm. By implementing proactive detection solutions, content-hosting platforms can help make the internet a safer place for everyone.
