Child sexual abuse and exploitation has evolved in the digital age. Decades ago, the distribution of child sexual abuse material was slower and more limited in reach, typically taking place through direct exchanges within networks, illegal storefronts, or the postal service. However, the emergence of the internet in the ’90s and early 2000s removed many of the barriers to accessing this content.

As technology advanced, child sexual abuse and exploitation adapted with it. Online risks have grown as bad actors exploit the latest tools, such as generative AI, to scale sexual harms against children.

The full scale of online child sexual abuse and exploitation is hard to quantify, because estimates reflect only the material that has been discovered. The statistics included here serve only as indicators of the scale of the issue.

In 2023:

NCMEC

36 million+ reports of suspected child sexual exploitation made to the CyberTipline.

104 million+ files related to CSAM were reported by registered electronic service providers.

IWF

392,665 reports suspected to contain child sexual abuse imagery.

Cybertip.ca

203,436 URLs included in 28,116 reports received from the public.

INHOPE member hotlines

780,000 content URLs of potentially illegal and harmful material depicting child sexual abuse and exploitation.

Levels of abuse & victim demographics

CSAM is the result of a spectrum of abuse types but very often depicts extreme levels of violence and brutality involving young children, some so young that they are still preverbal. The types of abuse depicted can be grouped into four categories:

- Category 1: nudity or erotic posing with no sexual activity

- Category 2: non-penetrative sexual activity between children or between adults and children, or masturbation

- Category 3: penetrative sexual activity between adults and children

- Category 4: sadism or bestiality

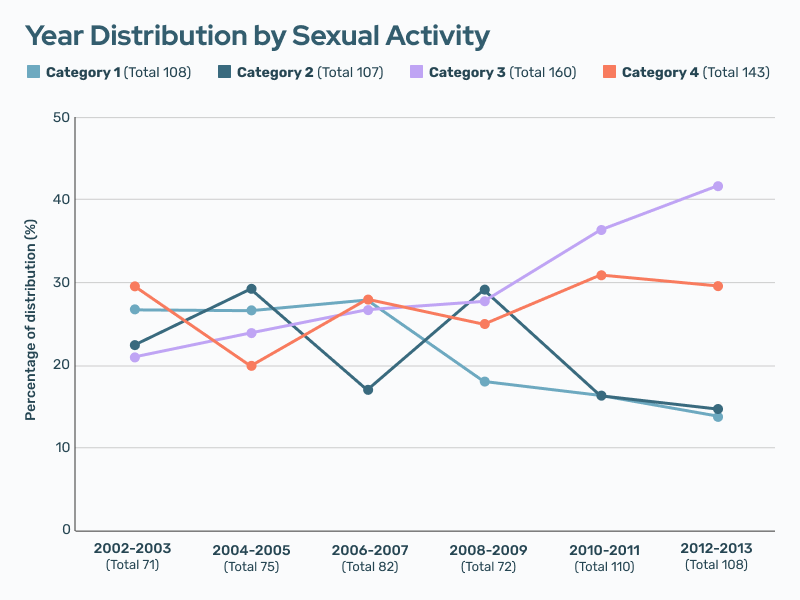

Unfortunately, there has been an apparent trend toward more violent and extreme material over time.

This table shows the percentage of NCMEC’s actively traded cases that fall within each abuse category over time. The category for a case is determined by the highest level of abuse depicted in a series of images. The increased prevalence of cases categorized as 3 and 4 indicates an increase in the amount of violent and extreme material in circulation.

Trends in age & gender

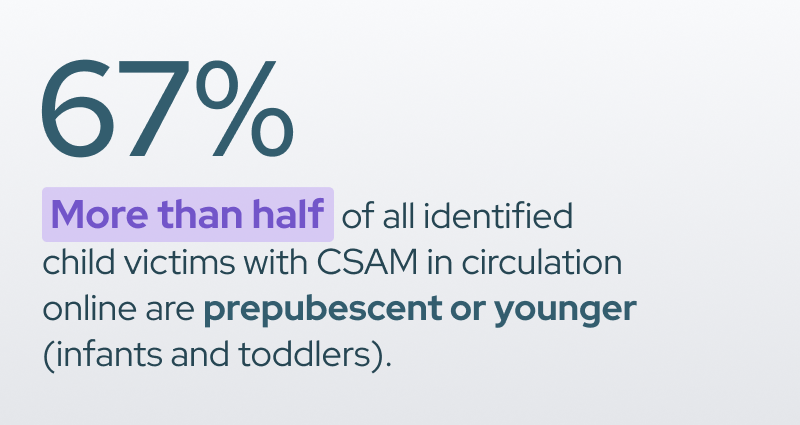

CSAM depicts the exploitation and abuse of children of all ages and genders. Based on available data, CSAM in circulation is more likely to show prepubescent children than older minors.

CSAM is more likely to depict girls than boys, according to the 2022 records of identified victims known to NCMEC’s Child Victim Identification Program (CVIP). However, according to a study conducted by INTERPOL & ECPAT, male victims are more likely to be seen in imagery with higher levels of violence than female victims.

In 2023:

IWF

2 million+ images and videos depicting victims estimated to be 3–13 years old.

INHOPE member hotlines

83% of CSAM victims depicted were aged 3–13.

95% of CSAM depicted female victims.

Access & opportunity

While offline access is the primary way abusers find the children they abuse and record in CSAM, the increasing role of technology and the internet in our lives has opened up new ways to target, groom, and exploit young people.

Now, someone seeking to abuse a child does not need to be in the same room with them. They simply need to be connected via the internet.

Online enticement and grooming

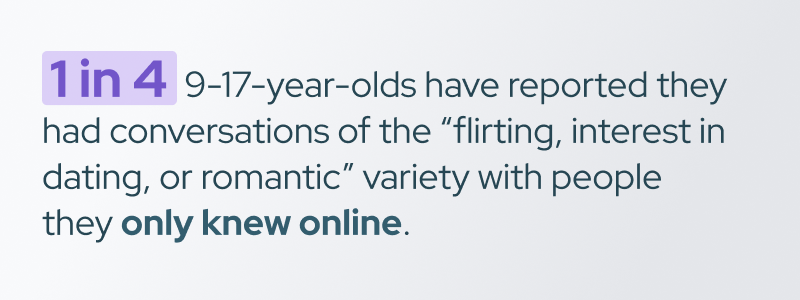

As many young people form new friendships online, the internet has also come to be viewed as a place for romance and flirtation. Unfortunately, online flirtation is not always limited to similarly aged peers. Many minors report finding it normal to flirt online with people outside their age group, and for some, with much older adults.

Online grooming

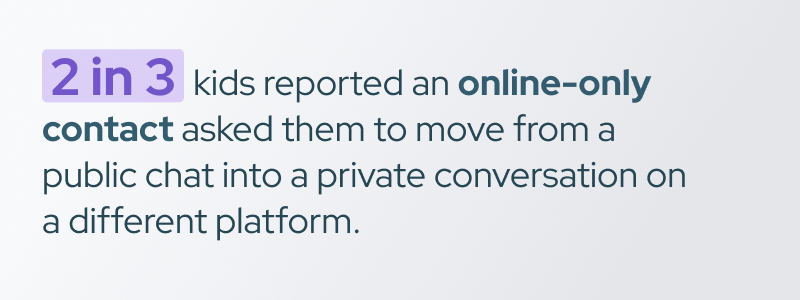

Many of the same tactics used for in-person grooming are also employed online. Over time, the predator builds rapport with both the child and the adults surrounding them, gradually isolating the child for abuse. Abusers leverage popular online spaces to target potential victims and build trust, including many of the most popular social media and gaming forums. Any platform designed for connecting people or uploading content is vulnerable to exploitation by online perpetrators.

Once a connection is established, potential victims are often moved to more private settings (DMs, encrypted messaging apps, etc.), away from the protections of public digital spaces and their more prominent content moderation.

In 2023:

NCMEC

More than 300% increase in reports of online enticement, from 44,155 in 2021 to 186,819 in 2023.

Cybertip.ca

14% of reports pertained to incidents on mobile devices, chat, instant messaging, or online gaming platforms.
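The percentage change behind NCMEC's online enticement figure can be checked directly from the two report counts given above. The calculation shows that the stated 300% is a rounded-down value:

$$
\frac{186{,}819 - 44{,}155}{44{,}155} \times 100\% = \frac{142{,}664}{44{,}155} \times 100\% \approx 323\%
$$

Put another way, 2023 saw roughly 4.2 times as many online enticement reports as 2021.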

Minors do not always view a sexual interaction with an adult (or even another minor significantly older than them) as fundamentally harmful or high risk. More than 1 in 5 minors report having had some type of online sexual interaction with someone they believe to be an adult. These interactions can include experiences such as being solicited for nudes (SG-CSAM), being sent explicit messages, or being sent explicit images by someone else.

Self-generated child sexual abuse material (SG-CSAM)

CSAM that appears to have been produced without an offender present in the images or taking them is considered SG-CSAM.

These images or videos result from multiple sources, such as:

- A romantic exchange with a friend from school

- A child being groomed and extorted to take explicit images of themselves at the demand of an offender online

- A screen capture of a live stream

Regardless of how these materials are produced, they are classified as CSAM. Once distributed, these images can be weaponized to manipulate the child, potentially for obtaining more images, arranging a physical meeting, or extorting money. Additionally, these images can circulate widely, catering to those seeking specific fantasies. Predators might also use these images to groom other potential victims.

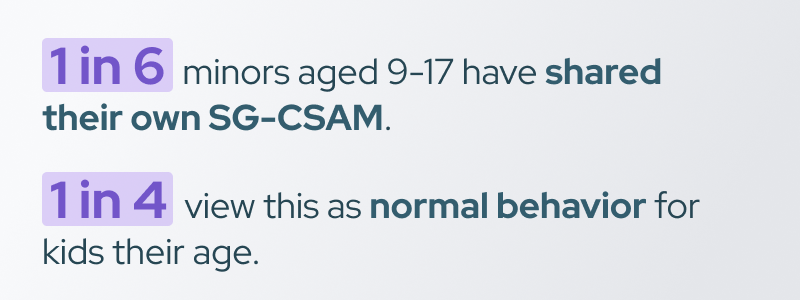

While these behaviors are more common among teenagers, 1 in 7 children aged 9–12 still say they have shared their own SG-CSAM.

It is slightly more common for SG-CSAM to be shared as part of an offline relationship. However, roughly 40% of kids who have shared SG-CSAM say they have done it with someone they only know online.

In 2023:

IWF

92% of content removed contained "self-generated" child sexual abuse material.

INHOPE member hotlines

16% of content depicted 14–17-year-olds (believed to be related to non-consensual intimate image (NCII) abuse). Self-generated CSAM content figures remained consistently high according to hotline analysts, mirroring trends from previous years.

AI-generated child sexual abuse material (AIG-CSAM)

As technology continues to evolve, so do the avenues for online child sexual abuse and the production of CSAM. Generative AI technologies are the latest example of this.

This technology’s use in everyday life is still in its early stages, but it has quickly become a mechanism through which to abuse kids.

In 2023:

NCMEC

4,700 reports of CSAM or other sexually exploitative content related to generative AI were received by the CyberTipline.

More than 70% of AIG-CSAM reports submitted to the CyberTipline are from traditional online platforms.

While AI-generated material currently accounts for a small percentage of reported CSAM, generative AI models are already being manipulated to produce custom CSAM. They are being used to generate new material depicting historical survivors, as well as custom material of specific children created from benign imagery, for the purposes of fantasy, extortion, and, in some cases, peer-based bullying within schools and communities.

AI and Sextortion

Worryingly, cases are emerging in which generative AI technology is being used to produce explicit images with which a victim is threatened. According to Thorn’s recent Financial Sextortion report, in 11% of sextortion reports that included information about how images were acquired, victims reported that they had not sent sexual imagery of themselves but were threatened with images that were in some way fake or inauthentic.

What’s next?

CSAM, in all its forms, is often the only clue available to locate a child and identify their abuser. Relying solely on user reports means these images may not be detected by law enforcement until months or years after they were recorded, leaving the child at risk of continued abuse.

This complex issue calls for a combination of mitigations—from proactive CSAM and CSE detection technologies to in-platform preventions and interventions.
